Zero Downtime Deployment

With Zero Downtime Deployment (ZDD), applications protected by Nevis, as well as configured authentication flows and most other Nevis functions, remain fully available during the deployment process. Most configuration changes and upgrades of the Nevis software components can be deployed without downtime, so users can continue to use the service without interruption. When you deploy to Kubernetes, nevisAdmin 4 provides ZDD out of the box: no additional configuration is necessary.

Observe Recommendations and Limitations, which also contains advice on zero downtime deployment.

How it works

ZDD is achieved by starting Nevis instances with the new configuration (or software version) alongside the old instances. Traffic is switched to the new instances as soon as they are ready to accept requests. Session data is kept in remote session stores, so it is not lost during the switch. For most Nevis upgrades, the old and the new database schema versions are compatible, so old and new instances can use the same database at the same time. To avoid interrupting ongoing connections, the old instances are kept alive for a period of time, until it is certain that all requests are being handled by the new instances.
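The role of the remote session store can be illustrated with a minimal Python sketch. `SessionStore` and `Instance` are hypothetical stand-ins, not Nevis APIs; the sketch only shows that a session started on an old instance can be continued on a new instance because neither instance keeps session state locally.

```python
from dataclasses import dataclass, field


@dataclass
class SessionStore:
    """Hypothetical stand-in for a remote session store shared by all instances."""
    sessions: dict = field(default_factory=dict)


@dataclass
class Instance:
    """Hypothetical Nevis instance that keeps no session state in local memory."""
    version: str
    store: SessionStore

    def handle(self, session_id: str) -> dict:
        # Look up (or create) the session in the shared store, never locally.
        session = self.store.sessions.setdefault(session_id, {"requests": 0})
        session["requests"] += 1
        session["last_served_by"] = self.version
        return session


store = SessionStore()
old = Instance("V1", store)
new = Instance("V2", store)

old.handle("alice")          # first request is served by the old instance
state = new.handle("alice")  # after the switch, the new instance continues the session
print(state)  # {'requests': 2, 'last_served_by': 'V2'}
```

If the session lived in the old instance's memory instead, the second request would start a fresh session with a request count of 1.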

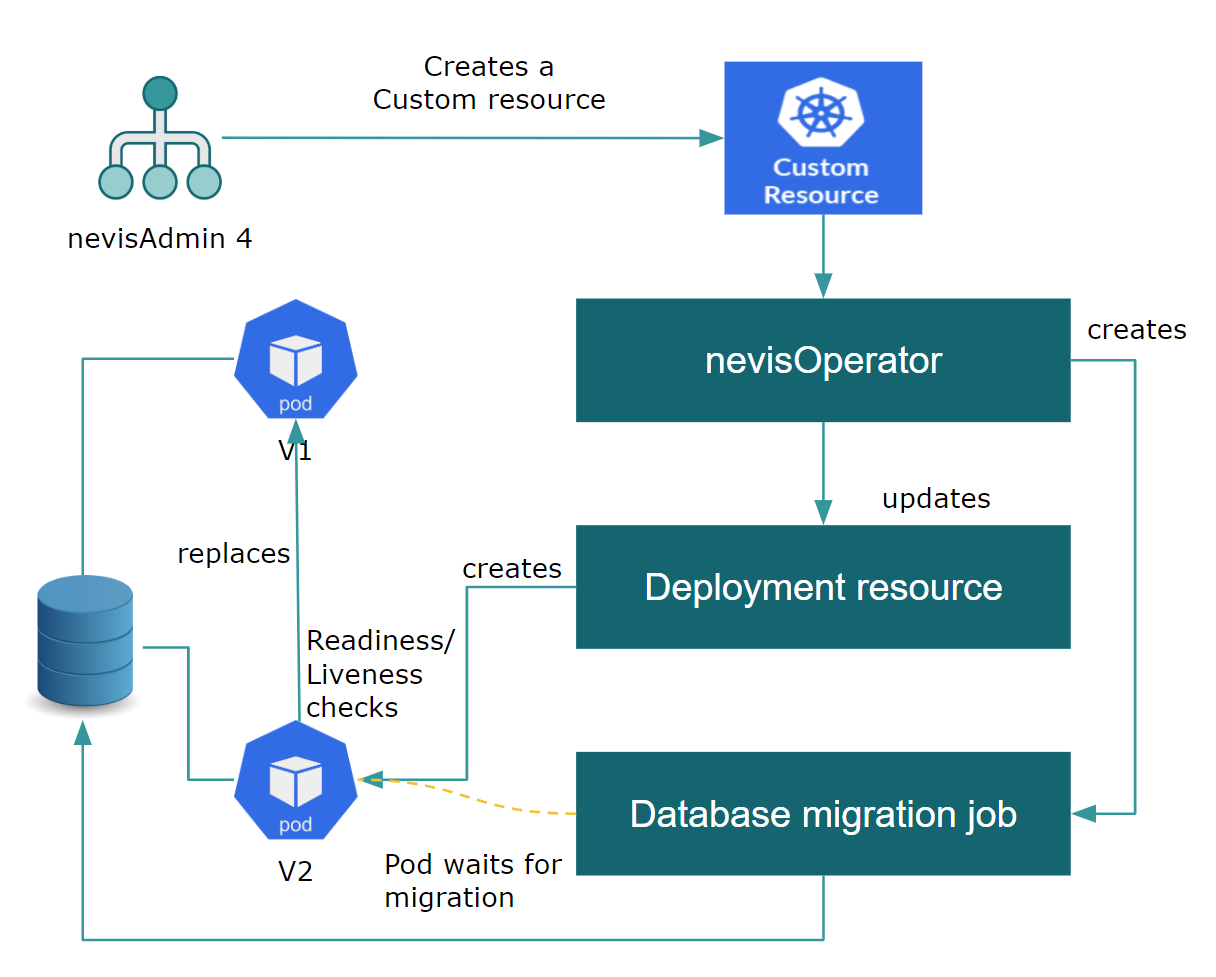

The diagram and the following notes illustrate the process in more detail:

After the initial deployment from nevisAdmin 4, instances (Kubernetes pods) will be running for each Nevis component. These are depicted as V1 in the diagram.

When you deploy again, nevisAdmin 4 updates one or more Nevis custom resources in the Kubernetes cluster.

- The custom resource for a component is updated only if there was a configuration change or software version change.
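One way to picture the rule that a custom resource is updated only when the configuration or software version changed is a fingerprint comparison. This is a hypothetical sketch, not necessarily how nevisAdmin 4 implements it: `fingerprint` and `needs_update` are illustrative names, and the setting and version strings are made up.

```python
import hashlib
import json
from typing import Optional


def fingerprint(config: dict, version: str) -> str:
    """Return a stable hash of a component's configuration and software version."""
    # sort_keys=True makes the hash independent of dictionary insertion order.
    payload = json.dumps({"config": config, "version": version}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def needs_update(deployed: Optional[str], config: dict, version: str) -> bool:
    """Hypothetical check: update the custom resource only if something changed."""
    return deployed != fingerprint(config, version)


deployed = fingerprint({"log_level": "INFO"}, "1.0")
print(needs_update(deployed, {"log_level": "INFO"}, "1.0"))   # False: nothing changed
print(needs_update(deployed, {"log_level": "DEBUG"}, "1.0"))  # True: configuration change
print(needs_update(deployed, {"log_level": "INFO"}, "1.1"))   # True: version change
```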

For each custom resource that is updated, nevisOperator will update the corresponding deployment resource. This, in turn, will trigger Kubernetes to start new instances of the component.

- In Kubernetes, each new instance corresponds to a new pod, depicted as V2.

- If it is necessary, based on the configuration, nevisOperator will also create a migration job to update the database to the newer schema.

If a migration job was scheduled, the newly created instance will wait for the job to complete.
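The wait for the migration job can be sketched as a polling loop. The status strings `"running"`, `"succeeded"` and `"failed"` and the `job_status` callable are assumptions for illustration; they are neither the Kubernetes Job API nor a nevisOperator interface.

```python
import time
from typing import Callable


def wait_for_migration(job_status: Callable[[], str],
                       poll_seconds: float = 1.0,
                       max_polls: int = 60) -> bool:
    """Poll a (hypothetical) migration job until it finishes.

    Returns True if the job succeeded, False if it failed or did not
    finish within max_polls checks.
    """
    for _ in range(max_polls):
        status = job_status()
        if status == "succeeded":
            return True
        if status == "failed":
            return False
        time.sleep(poll_seconds)  # still running: check again later
    return False


# Simulated job that is still running for two polls and then succeeds.
statuses = iter(["running", "running", "succeeded"])
started = wait_for_migration(lambda: next(statuses), poll_seconds=0.0)
print(started)  # True
```

In this model, the new instance starts serving only after the function returns `True`; a failed or timed-out migration leaves the old instances in place.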

The new instance will start and Kubernetes will check periodically if the instance is ready to accept requests.

When the instance is in ready state, new incoming traffic is switched from the old instance to the new one.

- To prevent interrupting ongoing connections, the old instance is kept alive for a period of time, but it no longer accepts new connections.
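The readiness check and traffic switch can be modelled in a few lines. In Kubernetes, this is handled by readiness probes and Service endpoints; the `Pod`, `switch_traffic` and `route` names below are hypothetical and only capture the rule that new connections go to ready, non-draining instances while a draining instance keeps its ongoing connections.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pod:
    """Hypothetical model of a pod as seen by the traffic router."""
    name: str
    ready: bool = False
    draining: bool = False
    open_connections: int = 0

    def accepts_new(self) -> bool:
        # Only ready pods that are not draining receive new connections.
        return self.ready and not self.draining


def switch_traffic(old: Pod, new: Pod) -> None:
    """Once `new` is ready, stop sending new connections to `old`."""
    if new.ready:
        old.draining = True  # existing connections on `old` are left untouched


def route(pods: List[Pod]) -> Pod:
    """Pick the first pod that accepts new connections."""
    for pod in pods:
        if pod.accepts_new():
            return pod
    raise RuntimeError("no instance is ready to accept requests")


old = Pod("v1", ready=True, open_connections=3)
new = Pod("v2")
print(route([old, new]).name)  # v1: the new pod is not ready yet
new.ready = True               # the readiness check now succeeds
switch_traffic(old, new)
print(route([old, new]).name)  # v2: new traffic goes to the new pod
print(old.open_connections)    # 3: ongoing connections on v1 are not cut
```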

As with a standard Kubernetes deployment, ZDD uses a rolling update: if multiple replicas are used, they are replaced with new ones one by one. As a result, old and new instances run side by side for a while and may communicate with each other.
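The following simplified simulation shows why old and new instances coexist during a rolling update. It replaces one replica per step and ignores Kubernetes details such as `maxSurge` and `maxUnavailable`, which control how many pods may be added or missing while the update is in progress.

```python
from typing import List


def rolling_update(replicas: List[str], new_version: str) -> List[List[str]]:
    """Replace replicas one at a time; return the versions running after each step."""
    current = list(replicas)
    history = [list(current)]
    for i in range(len(current)):
        current[i] = new_version  # one old replica is replaced by one new replica
        history.append(list(current))
    return history


history = rolling_update(["V1", "V1", "V1"], "V2")
for step in history:
    print(step)
# ['V1', 'V1', 'V1']
# ['V2', 'V1', 'V1']
# ['V2', 'V2', 'V1']
# ['V2', 'V2', 'V2']
```

Every intermediate step contains both V1 and V2, which is why the two configurations must be able to work together.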

To avoid "configuration mismatch errors", we recommend the following approach:

- Make sure that the new configuration (V2) is compatible with the old configuration (V1).

- If this is not possible, for example if you need to remove some settings, consider adding an intermediate step:

  - Create a compatible intermediate configuration (V1.5) that adds the new settings but does not remove the old settings.

  - Deploy V1.5 and wait until all V1 instances have terminated.

  - Remove the old settings to create the required V2 configuration.

  - Deploy V2.
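The intermediate-step recommendation can be expressed as a small planning function. This is a sketch under simplifying assumptions: settings are a flat dictionary, and only removed settings are treated as incompatible (in practice, a changed value could also be incompatible). `plan_deployment` and the setting names are hypothetical.

```python
from typing import Dict, List


def plan_deployment(v1: Dict[str, object], v2: Dict[str, object]) -> List[Dict[str, object]]:
    """Return the configurations to deploy, in order (hypothetical helper)."""
    removed = v1.keys() - v2.keys()
    if not removed:
        # Simplifying assumption: if nothing is removed, V2 is compatible with V1.
        return [v2]
    # V1.5: keep every old setting and add the settings that are new in V2.
    added = {key: value for key, value in v2.items() if key not in v1}
    intermediate = {**v1, **added}
    return [intermediate, v2]


v1 = {"old_endpoint": "/legacy", "timeout": 30}
v2 = {"new_endpoint": "/api", "timeout": 30}
for config in plan_deployment(v1, v2):
    print(config)
# {'old_endpoint': '/legacy', 'timeout': 30, 'new_endpoint': '/api'}
# {'new_endpoint': '/api', 'timeout': 30}
```

Between the two deployments in the returned plan, wait until all V1 instances have terminated.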