Developer guide

This guide is aimed at developers and integrators who want to modify the sample reports delivered with nevisReports, or create entirely new reports on Nevis data. In this guide developers and integrators will learn about:

- Elasticsearch basics,

- the document types used by nevisReports in Elasticsearch,

- logging custom properties to Elasticsearch,

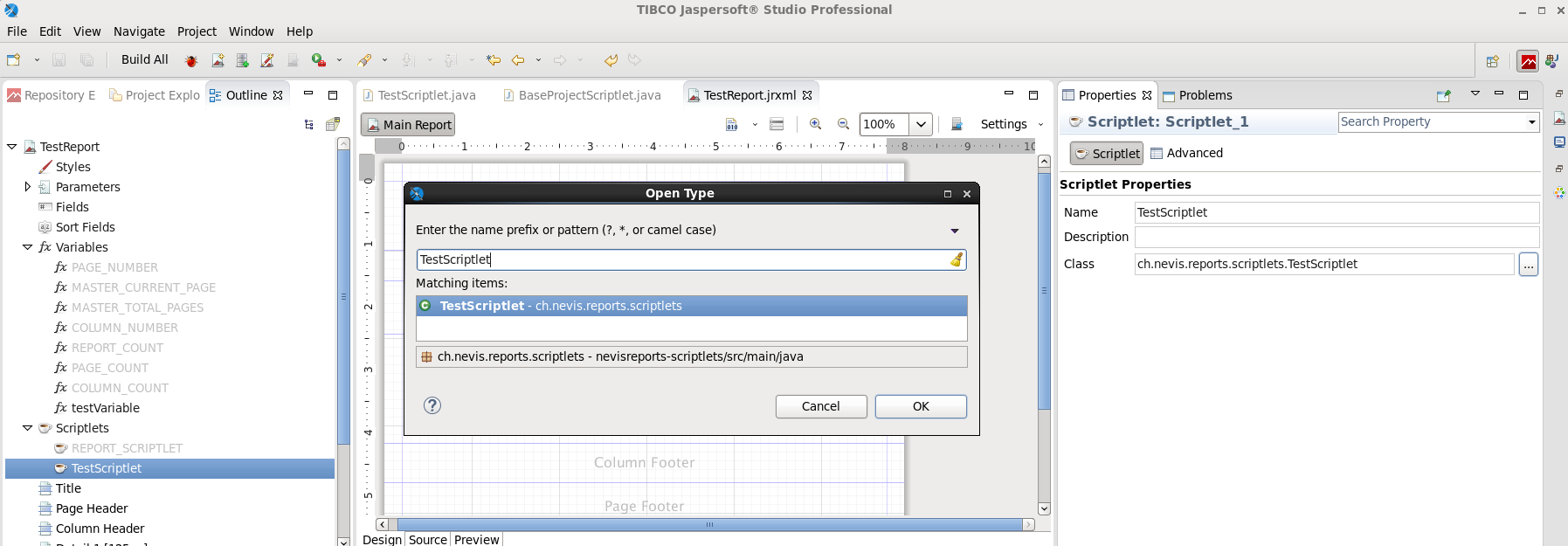

- using and setting up Jaspersoft Studio for developing reports,

- and using JasperReports to create reports with Elasticsearch as datasource.

For information about the nevisReports architecture and initial installation, refer to the nevisReports Reference Guide.

nevisReports comes with an embedded JasperReports Server license that allows creating reports only on data provided by Nevis components such as nevisProxy, nevisAuth and nevisIDM.

Additional reading

nevisReports is built on top of several well-known third-party components like Elasticsearch or JasperReports Server. All these third-party components come with a wide range of documentation, guides and tutorials. In case of doubt or problems we suggest you see the following documentation:

(https://www.elastic.co/guide/en/elasticsearch/reference/6.2/_basic_concepts.html),(https://www.elastic.co/guide/en/elasticsearch/reference/6.2/index.html),(https://www.elastic.co/learn) - including videos and books,(http://community.jaspersoft.com/wiki/jaspersoft-community-wiki-0),(https://www.youtube.com/playlist?list=PL5NudtWaQ9l4LEzDAU-DdBQAp21lLH00r)(https://community.jaspersoft.com/documentation/tibco-jaspersoft-studio-user-guide/v643/getting-started-jaspersoft-studio)

We also consider Google/Bing or Stackoverflow as valuable sources of information on specific JasperReports and Elasticsearch topics.

Elasticsearch Introduction

nevisReports relies on Elasticsearch for storing and querying the large amounts of log data that accumulate in typical Nevis installations. Elasticsearch is a NoSQL database with an architecture that makes it suitable for handling large volumes of log data.

Elasticsearch is used as the primary database for the following reasons:

- Schema-free data modeling: Depending on the target environment, different kinds of fields or event types may be required. Elasticsearch allows defining these field and event types on-the-fly and gracefully handles any missing data.

- Powerful indexing and queries: Elasticsearch automatically indexes data, which facilitates fast data aggregation and quick execution of free text queries.

- Designed for scale and resiliency: Elasticsearch allows the addition of nodes, i.e., (virtual) machines, as needed. It automatically synchronizes data with those nodes and distributes the query load across the nodes. These scalability features have a proven track record with big cloud service providers.

- Built-in support for log file management and analysis: Elasticsearch is part of the Elastic Stack, which is optimized to process and visualize any amount and kind of log data.

- Easy data maintenance: To free up space, Elasticsearch can easily partition and remove log data that is no longer needed.

- Straightforward installation: The initial Elasticsearch configuration is a good starting point for many environments.

- Open source license: The open source version of Elasticsearch is included with nevisReports without incurring additional license costs.

How Elasticsearch differs from SQL databases

Elasticsearch has some properties that make it fundamentally different from the various well-known SQL databases.

- Eventual consistency: When data is submitted to Elasticsearch, it is not immediately available for querying. Instead, there is a delay of typically five to ten seconds until the data is indexed and can be returned by queries.

- No "random" joins: To be able to efficiently query relations, you have to denormalize data or organize it in parent-child relationships before you store the data in the database.

- No elaborate constraints system: Elasticsearch is configured very permissively for nevisReports in regard to the storage of data types and properties. Its referential integrity and data constraint system is limited. For this reason, we recommend adding a data integrity test to your test suite that checks if data in the index adheres to certain rules.

- Java-based: It may be necessary to tune the Elasticsearch database in demanding setups to optimize its performance. Since Elasticsearch is based on Java, know-how of Java profiling tools and tuning options will be helpful when tuning is required.

When developing your own reporting use cases on top of Elasticsearch, design your solutions with the above properties in mind. Also, get familiar with Elasticsearch before starting development (see the next section).

Read the manuals

For more detailed information on Elasticsearch, read the excellent Elasticsearch manuals online. Below are some pointers to get you started on the query topic:

- Basic concepts - focus on the chapters "Index", "Type", and "Document"

- Exploring your data

- Search in Depth

- Search APIs/Search

The Elasticsearch schema for nevisReports is documented in the section: Elasticsearch Document Types, of this developer guide.

Example queries on nevisReports data

The examples below describe some typical use cases with index data provided by nevisReports.

The examples use many different features of Elasticsearch, so it is worth skimming through all of them.

This applies even if you already know SQL since Elasticsearch is quite different.

In a real-world reporting scenario you may have to combine approaches from several examples into one big query.

We recommend using the SoapUI tool to try out queries.

Breaking changes

The following breaking changes apply to existing nevisReports queries.

- The terms "filtered", "and", "or", "not" and "missing" have been deprecated and removed.

- Deprecation of file-based templates. You need to migrate to a new format for pre-registered templates.

- The "type" concept has been deprecated and removed.

The sample queries have been refactored to make them compatible with the latest Elasticsearch 6.2.x version. The removal of the "type" concept has been mitigated by the introduction of an additional custom field type in each document (see here).

There are many other breaking changes between the Elasticsearch versions 2.4.x to 6.2.x. If you want to find out more, refer to 5.x breaking changes and 6.x breaking changes.

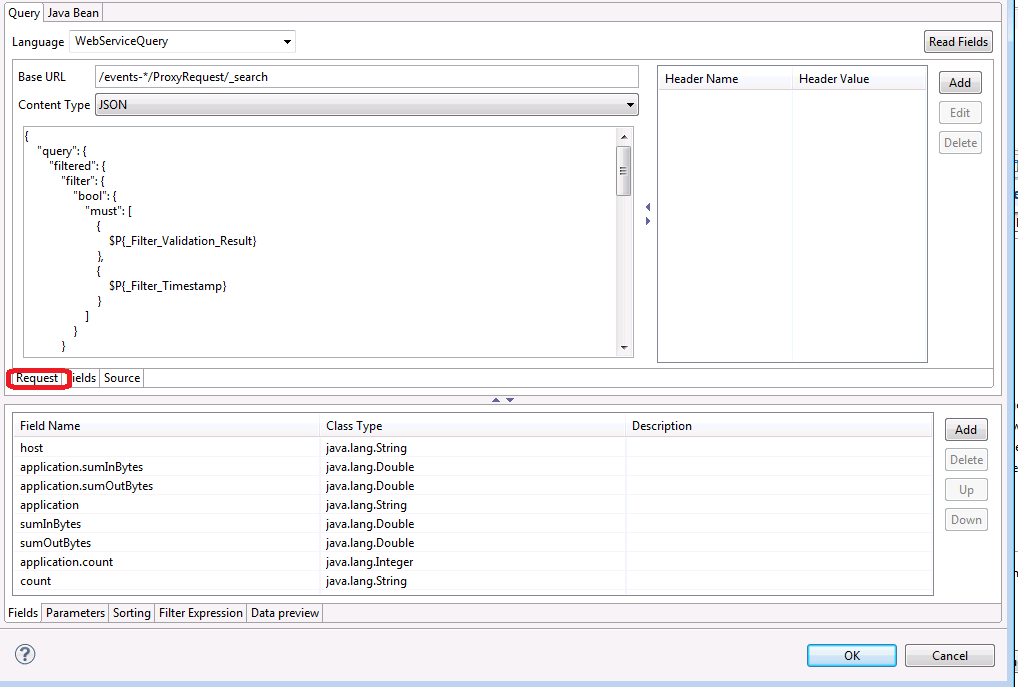

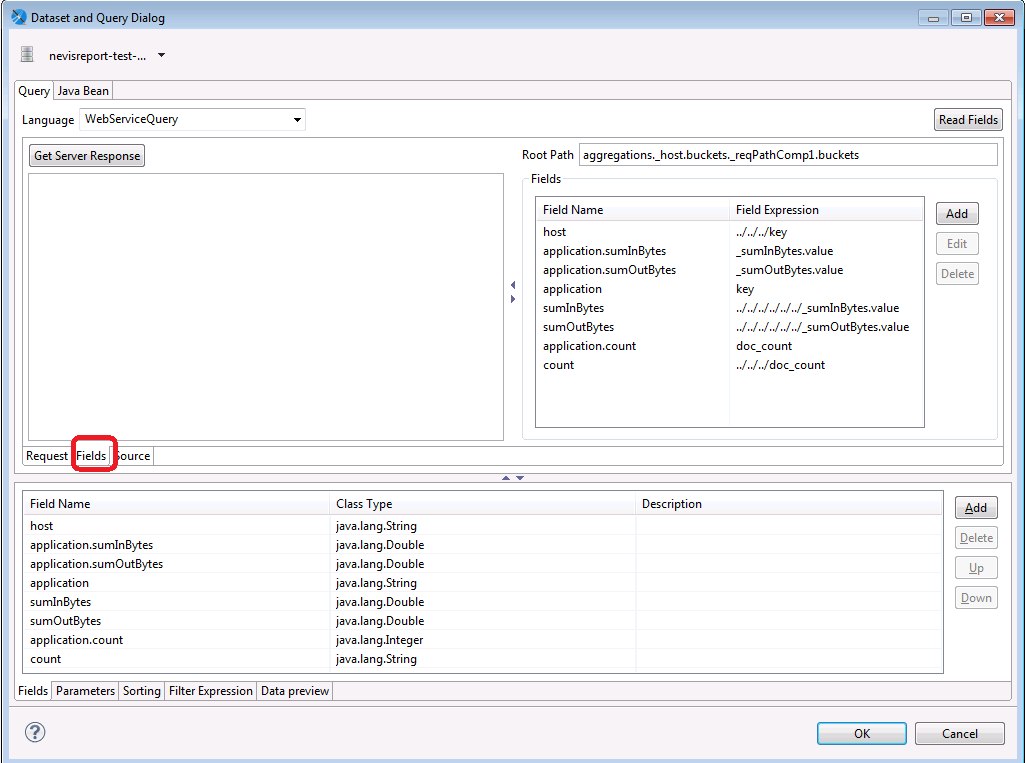

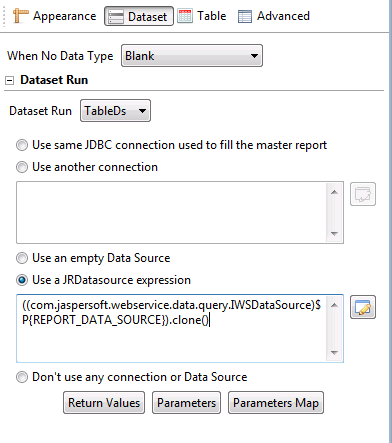

Aggregate nevisProxy requests by host name and application

Use case

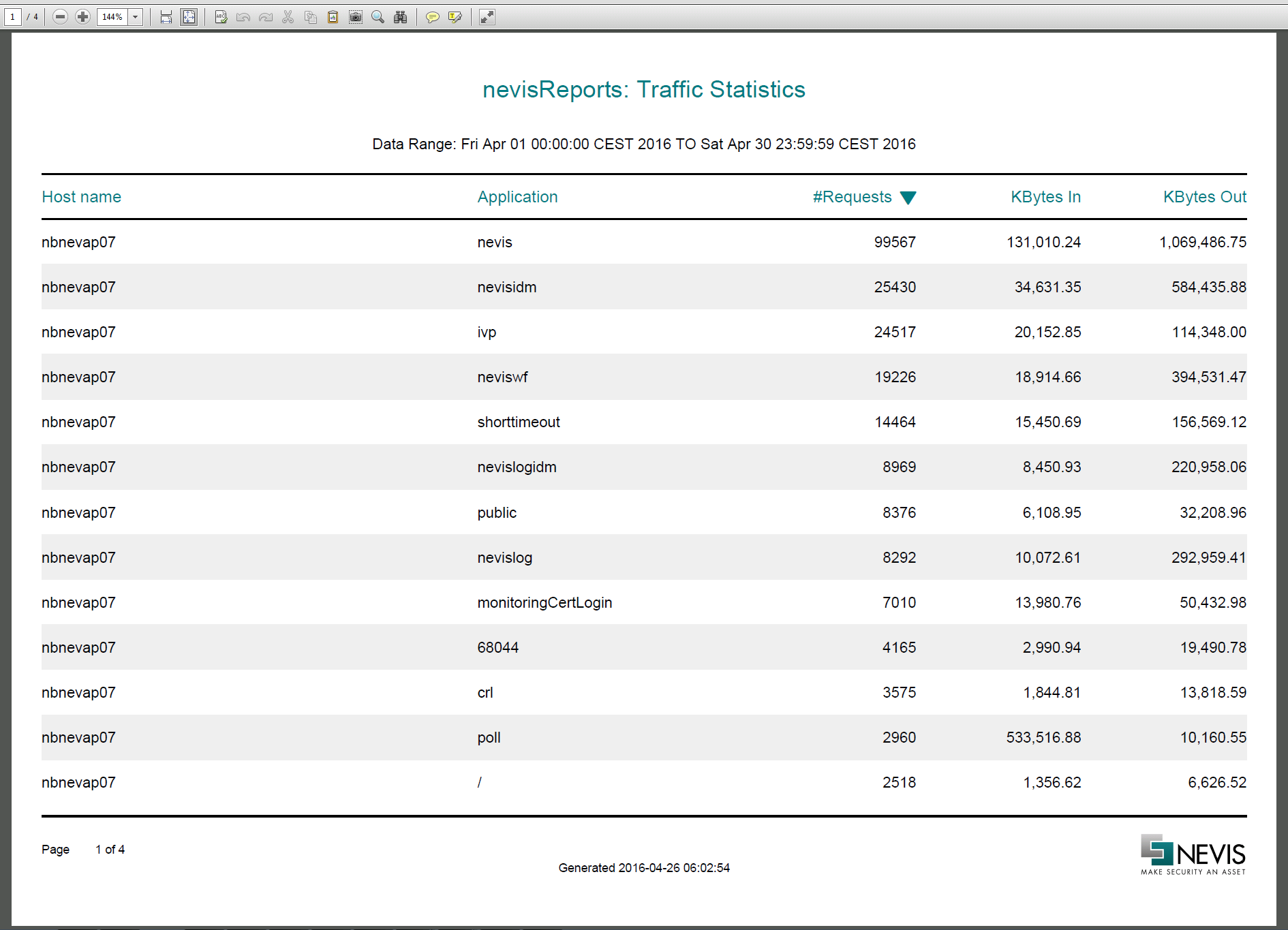

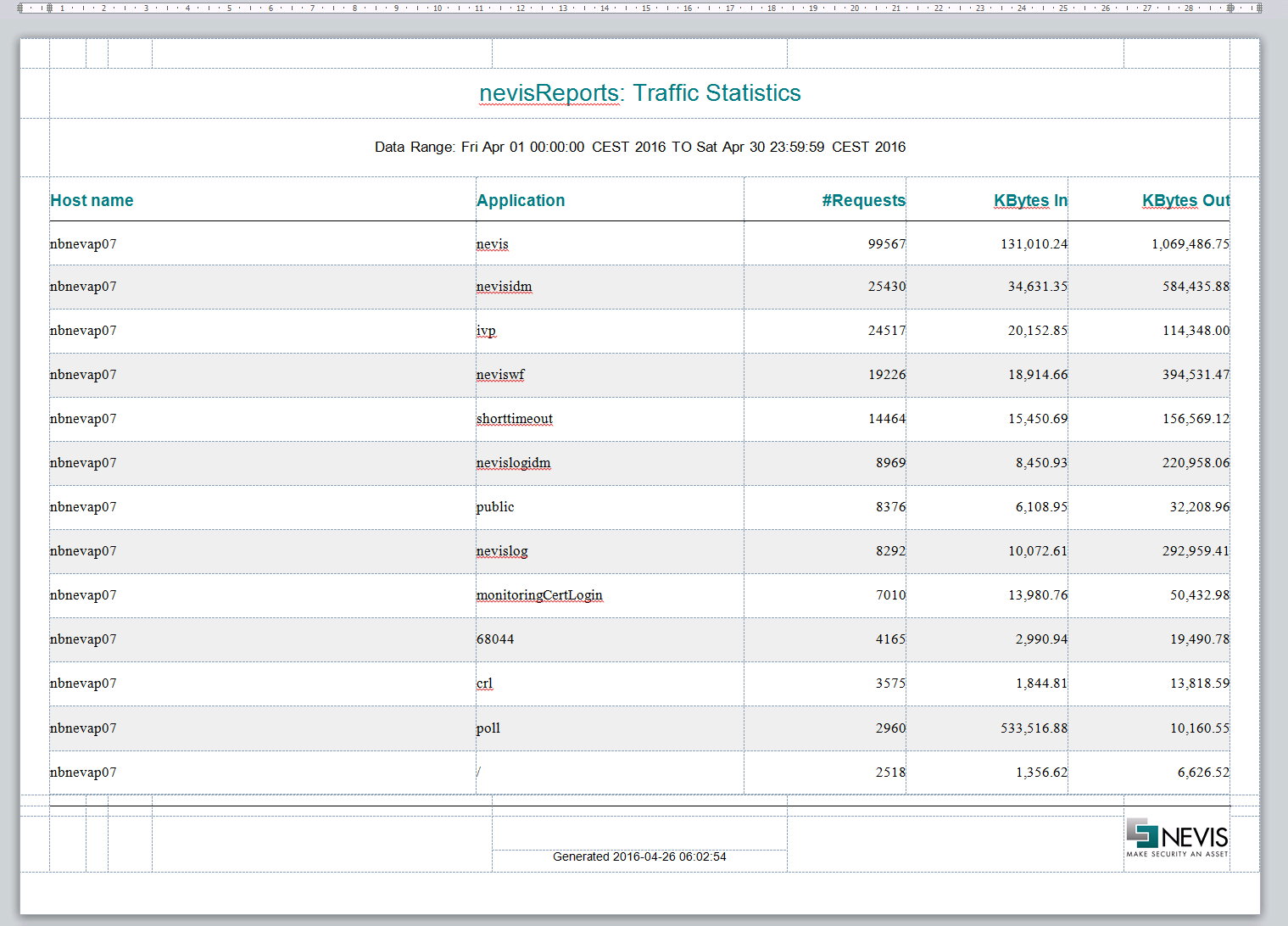

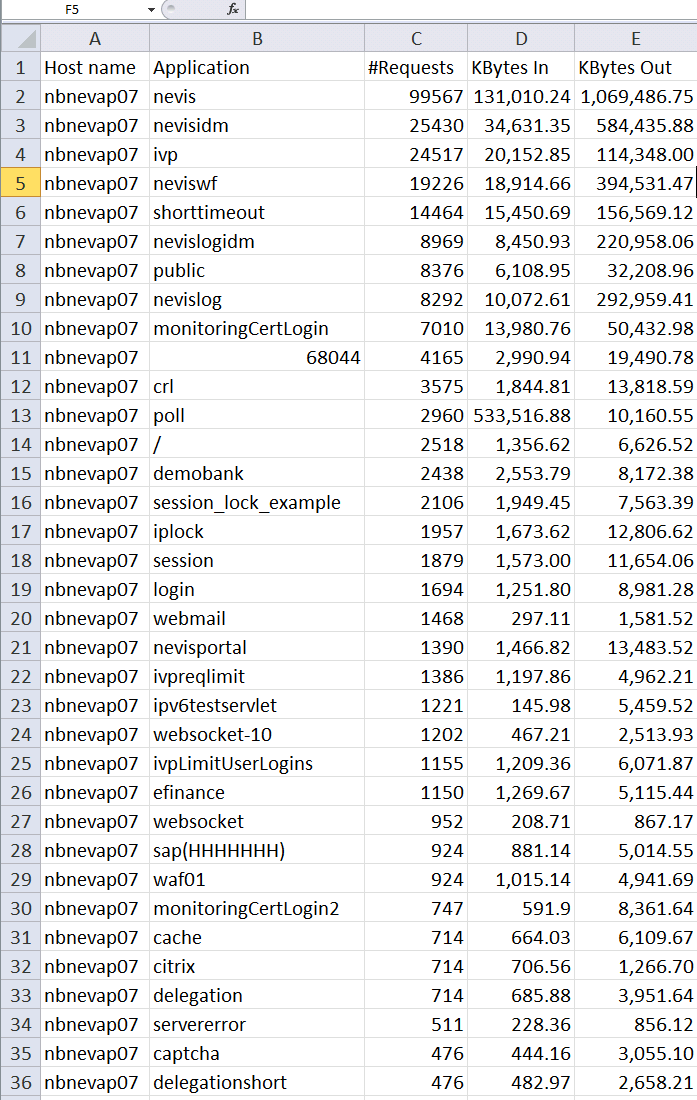

Create a report that shows the number of requests and total number of bytes received or sent for each external (virtual) host name / application pair.

Solution

Perform a query on the "ProxyRequest" type of document.

- Filter by time range.

Perform a nested bucket aggregation.

- First level bucket = serviceName.raw (represents host names).

- Second level bucket = reqPathComp1.raw (represents applications). Note that the applications must have unique context roots.

Do a metric aggregation at the required levels to get the number of request and bytes.For example:

_sumInBytes at serviceName level= total bytes received for this serviceName (see #1 in the sample output)_sumInBytes at reqPathComp1 level= total bytes received for this serviceName & reqPathComp1 (see #2 in the sample output).

Use **serviceName.raw in the query, because it contains the untokenized service name (e.g., "abc.com"). If instead you use serviceName, tokenized results will be returned, which is usually not desired (e.g., one for the token "abc" and one for "com"). The same applies to reqPathComp1.raw.

From Elasticsearch version 6.x onwards, the type concept is not supported anymore. To guarantee that the data model remains compatible with the model of nevisReports, we have added a custom field type in the data model. The samples below show how to solve the use case for both scenarios (before and after version 6.x of Elasticsearch).

POST /events-*/ProxyRequest/_search

{

"query": {

"filtered": {

"filter": {

"range": {

"@timestamp": {

"gte": "2015-07-31T14:40||/m",

"lte": "2015-07-31T14:40||/m"

}

}

}

}

},

"size": 0,

"aggs": {

"_serviceName": {

"terms": {

"field": "serviceName.raw"

},

"aggs": {

"_reqPathComp1": {

"terms": {

"field": "reqPathComp1.raw"

},

"aggs": {

"_sumInBytes": {

"sum": {

"field": "inBytes"

}

},

"_sumOutBytes": {

"sum": {

"field": "outBytes"

}

}

}

}

}

},

"_sumInBytes": {

"sum": {

"field": "inBytes"

}

},

"_sumOutBytes": {

"sum": {

"field": "outBytes"

}

}

}

}

POST /events-*/_search

{

"query": {

"bool": {

"must": [

{

"term": {

"type": {

"value": "ProxyRequest"

}

}

},

{

"range": {

"@timestamp": {

"gte": "2015-07-31T14:40||/m",

"lte": "2015-07-31T14:40||/m"

}

}

}

]

}

},

"size": 0,

"aggs": {

"_serviceName": {

"terms": {

"field": "serviceName.raw"

},

"aggs": {

"_reqPathComp1": {

"terms": {

"field": "reqPathComp1.raw"

},

"aggs": {

"_sumInBytes": {

"sum": {

"field": "inBytes"

}

},

"_sumOutBytes": {

"sum": {

"field": "outBytes"

}

}

}

}

}

},

"_sumInBytes": {

"sum": {

"field": "inBytes"

}

},

"_sumOutBytes": {

"sum": {

"field": "outBytes"

}

}

}

}

{

"took": 5,

"timed_out": false,

"_shards": {

"total": 2,

"successful": 2,

"failed": 0

},

"hits": {

"total": 37,

"max_score": 0,

"hits": []

},

"aggregations": {

"_sumInBytes": { #1

"value": 37000,

"value_as_string": "37000.0"

},

"_sumOutBytes": {

"value": 370000,

"value_as_string": "370000.0"

},

"_serviceName": {

"doc_count_error_upper_bound": 0,

"sum_other_doc_count": 0,

"buckets": [

{

"key": "adnlt115.zh.adnovum.ch",

"doc_count": 37,

"_reqPathComp1": {

"doc_count_error_upper_bound": 0,

"sum_other_doc_count": 0,

"buckets": [

{

"key": "nevislogrend",

"doc_count": 12,

"_sumInBytes": { #2

"value": 12000,

"value_as_string": "12000.0"

},

"_sumOutBytes": {

"value": 120000,

"value_as_string": "120000.0"

}

},

{

"key": "nevis",

"doc_count": 11,

"_sumInBytes": {

"value": 11000,

"value_as_string": "11000.0"

},

"_sumOutBytes": {

"value": 110000,

"value_as_string": "110000.0"

}

},

{

"key": "loginpages",

"doc_count": 8,

"_sumInBytes": {

"value": 8000,

"value_as_string": "8000.0"

},

"_sumOutBytes": {

"value": 80000,

"value_as_string": "80000.0"

}

},

{

"key": "/",

"doc_count": 6,

"_sumInBytes": {

"value": 6000,

"value_as_string": "6000.0"

},

"_sumOutBytes": {

"value": 60000,

"value_as_string": "60000.0"

}

}

]

}

}

]

}

}

}

Aggregate nevisProxy requests by nevisAuth sessions - join

Use case

Create a report that lists the start date, end date and duration of every session as well as request-based statistics per session, such as total requests and total bytes received/sent.

Solution

Perform a nested aggregation on AuthSession objects. Use the following criteria:

Unique session, via the built-in field "_uid".

This creates one bucket for every session.

The children of type ProxyRequest.

So for every session we look at the session's children.

The sum of ProxyRequest.inBytes and ProxyRequest.outBytes

Thus, we aggregate the inBytes and outBytes for the session's children.

Use top_hits to return the data (document) of each session. By using top_hits it is guaranteed that the system returns data for exactly one session.

- You can retrieve the total request count per session via the JSON path

*/_childrenProxyRequest/doc_count. - In SQL parlance, the following is done: the AuthSession "table" drives the query and joins in "rows" from the ProxyRequest table. In the query result some "columns" are aggregated, "grouped by" the AuthSession's _uid "column".

From Elasticsearch version 6.x onwards, the type concept is not supported anymore (see <http://www.elastic.co/guide/en/elasticsearch/reference/6.2/removal-of-types.html/>). To guarantee that the data model remains compatible with the model of nevisReports, we have added a custom field type in the data model.

The samples below show how to solve the use case for both scenarios (before and after version 6.x of Elasticsearch).

POST /events-*/AuthSession/_search

{

"size": 0,

"aggs": {

"_termsUid": {

"terms": {

"field": "_uid"

},

"aggs": {

"_childrenProxyRequest": {

"children": {

"type": "ProxyRequest"

},

"aggs": {

"_sumInBytes": {

"sum": {

"field": "ProxyRequest.inBytes"

}

},

"_sumOutBytes": {

"sum": {

"field": "ProxyRequest.outBytes"

}

}

}

},

"_topHits": {

"top_hits": {}

}

}

}

}

}

POST /events-*/_search

{

"query": {

"bool": {

"must": [

{

"term": {

"type": {

"value": "AuthSession"

}

}

}

]

}

}

,

"size": 0,

"aggs": {

"_termsUid": {

"terms": {

"field": "_id"

},

"aggs": {

"_childrenProxyRequest": {

"children": {

"type": "ProxyRequest"

},

"aggs": {

"_sumInBytes": {

"sum": {

"field": "ProxyRequest.inBytes"

}

},

"_sumOutBytes": {

"sum": {

"field": "ProxyRequest.outBytes"

}

}

}

},

"_topHits": {

"top_hits": {}

}

}

}

}

}

{

"took": 1,

"timed_out": false,

"_shards": {

"total": 2,

"successful": 2,

"failed": 0

},

"hits": {

"total": 2,

"max_score": 0,

"hits": []

},

"aggregations": {"_termsUid": {

"doc_count_error_upper_bound": 0,

"sum_other_doc_count": 0,

"buckets": [

{

"key": "AuthSession#1b3da8c0ff0ds1X3y7we3+ceoo6TQuewApwhOPngFE2OdLwkcVKZLm4=",

"doc_count": 1,

"_childrenProxyRequest": {

"doc_count": 3,

"_sumOutBytes": {"value": 30000},

"_sumInBytes": {"value": 3000}

},

"_topHits": {"hits": {

"total": 1,

"max_score": 1,

"hits": [ {

"_index": "events-2015.07",

"_type": "AuthSession",

"_id": "1b3da8c0ff0ds1X3y7we3+ceoo6TQuewApwhOPngFE2OdLwkcVKZLm4=",

"_score": 1,

"_source": {

"@version": "1",

"@timestamp": "2015-07-17T08:45:37.000Z",

"host": "adnlt275-vm",

"logVersion": "1",

"eventType": "authentication-completed",

"trID": "c0a80dff-6c6c-a8c0ff0d-14e9b30b648-00000000",

"sessionID": "YATMLfpS_IymQ7KptF3SIPA0nWSmlFuBaWpYKrXvtNg",

"client": {

"sessionID": "1b3da8c0ff0ds1X3y7we3+ceoo6TQuewApwhOPngFE2OdLwkcVKZLm4=",

"clientID": "adnlt115.zh.adnovum.ch",

"sslProtocol": "TLSv1.2",

"sslCipher": "DHE-RSA-AES128-SHA",

"sslClientDN": "cn=Lior,ou=MyNevisSetup,o=Adnvum Informatik AG",

"hostName": "adnlt115.zh.adnovum.ch",

"port": 443,

"clientIP": "192.168.13.255"

},

"agent": {

"userAgent": "Mozilla/5.0 (X11; Linux x86_64; rv:31.0) Gecko/20100101 Firefox/31.0",

"agentIP": "192.168.13.255",

"sslProtocol": "TLSv1.2",

"sslCipher": "DHE-RSA-AES128-SHA",

"resPath": "/nevis/test/logrend/",

"resQuery": null,

"reqPath": "/nevis/test/logrend/",

"reqQuery": "?login"

},

"sessionStartTimestamp": "2015-07-17T10:45:37.000+0200",

"loginID": "blabla",

"realm": "SSO_TEST",

"conversationId": "367751585188",

"userID": "foobar",

"roles": [

"auth.whatever",

"foo",

"bar"

],

"authLevel": "auth.whatever",

"eventTrail": [

{

"state": "TestUseridPassword",

"timestamp": "2015-07-17T10:44:52.000+0200",

"tech": "dummy",

"type": "username/password",

"detail": "lior"

},

{

"state": "IdmPasswordChange",

"timestamp": "2015-07-17T10:44:57.000+0200",

"tech": "nevisIDM",

"type": "mutation",

"detail": ""

}

],

"custom": {}

}

}]

}}

},

{

"key": "AuthSession#6c2da8c0ff0d6jANsKFcYgmn6m7w8hx/IfarPSkKO9Wd/+qUfAAJ+vc=",

"doc_count": 1,

"_childrenProxyRequest": {

"doc_count": 78,

"_sumOutBytes": {"value": 780000},

"_sumInBytes": {"value": 78000}

},

"_topHits": {"hits": {

"total": 1,

"max_score": 1,

"hits": [ {

"_index": "events-2015.07",

"_type": "AuthSession",

"_id": "6c2da8c0ff0d6jANsKFcYgmn6m7w8hx/IfarPSkKO9Wd/+qUfAAJ+vc=",

"_score": 1,

"_source": {

"@version": "1",

"@timestamp": "2015-07-17T08:45:37.000Z",

"host": "adnlt275-vm",

"logVersion": "1",

"eventType": "authentication-completed",

"trID": "c0a80dff-6c6c-a8c0ff0d-14e9b30b648-00000000",

"sessionID": "YATMLfpS_IymQ7KptF3SIPA0nWSmlFuBaWpYKrXvtNg",

"client": {

"sessionID": "6c2da8c0ff0d6jANsKFcYgmn6m7w8hx/IfarPSkKO9Wd/+qUfAAJ+vc=",

"clientID": "adnlt115.zh.adnovum.ch",

"sslProtocol": "TLSv1.2",

"sslCipher": "DHE-RSA-AES128-SHA",

"sslClientDN": "cn=Lior,ou=MyNevisSetup,o=Adnvum Informatik AG",

"hostName": "adnlt115.zh.adnovum.ch",

"port": 443,

"clientIP": "192.168.13.255"

},

"agent": {

"userAgent": "Mozilla/5.0 (X11; Linux x86_64; rv:31.0) Gecko/20100101 Firefox/31.0",

"agentIP": "192.168.13.255",

"sslProtocol": "TLSv1.2",

"sslCipher": "DHE-RSA-AES128-SHA",

"resPath": "/nevis/test/logrend/",

"resQuery": null,

"reqPath": "/nevis/test/logrend/",

"reqQuery": "?login"

},

"sessionStartTimestamp": "2015-07-17T10:45:37.000+0200",

"sessionEndTimestamp": "2015-07-17T10:55:37.000+0200",

"sessionEndReason": "session-expired",

"loginID": "albalb",

"realm": "SSO_TEST",

"conversationId": "367751585188",

"userID": "foobar",

"roles": [

"auth.whatever",

"foo",

"bar"

],

"authLevel": "auth.whatever",

"eventTrail": [

{

"state": "TestUseridPassword",

"timestamp": "2015-07-17T10:44:52.000+0200",

"tech": "dummy",

"type": "username/password",

"detail": "lior"

},

{

"state": "IdmPasswordChange",

"timestamp": "2015-07-17T10:44:57.000+0200",

"tech": "nevisIDM",

"type": "mutation",

"detail": ""

}

],

"custom": {}

}

}]

}}

}

]

}}

}

Alternative solutions

The following alternative solutions can be useful if you have other use cases, e.g., if you want to combine queries and aggregations, perform multiple unrelated aggregations, or return multiple document types.

POST /events*/_search

{

"query": {

"filtered": {

"filter": {

"type": {

"value": "AuthSession"

}

}

}

},

"size": 0,

"aggs": {

"_termsUid": {

"terms": {

"field": "_uid"

},

"aggs": {

"_childrenProxyRequest": {

"children": {

"type": "ProxyRequest"

},

"aggs": {

"_sumInBytes": {

"sum": {

"field": "ProxyRequest.inBytes"

}

}

}

},

"_topHits": {

"top_hits": {}

}

}

}

}

}

POST /events*/_search

{

"query": {

"bool": {

"filter": {

"bool": {

"must": [{

"type": {

"value": "AuthSession"

}

}]

}

}

}

},

"size": 0,

"aggs": {

"_termsUid": {

"terms": {

"field": "_id"

},

"aggs": {

"_childrenProxyRequest": {

"children": {

"type": "ProxyRequest"

},

"aggs": {

"_sumInBytes": {

"sum": {

"field": "ProxyRequest.inBytes"

}

}

}

},

"_topHits": {

"top_hits": {}

}

}

}

}

}

POST /events*/_search

{

"size": 0,

"aggs": {

"sessionType": {

"filter": {

"type": {

"value": "AuthSession"

}

},

"aggs": {

"_termsUid": {

"terms": {

"field": "_uid"

},

"aggs": {

"_childrenProxyRequest": {

"children": {

"type": "ProxyRequest"

},

"aggs": {

"_sumInBytes": {

"sum": {

"field": "ProxyRequest.inBytes"

}

}

}

},

"_topHits": {

"top_hits": {}

}

}

}

}

}

}

}

POST /events*/_search

{

"size": 0,

"aggs": {

"sessionType": {

"filter": {

"bool": {

"must": [{

"type": {

"value": "AuthSession"

}

}]

}

},

"aggs": {

"_termsUid": {

"terms": {

"field": "_id"

},

"aggs": {

"_childrenProxyRequest": {

"children": {

"type": "ProxyRequest"

},

"aggs": {

"_sumInBytes": {

"sum": {

"field": "ProxyRequest.inBytes"

}

}

}

},

"_topHits": {

"top_hits": {}

}

}

}

}

}

}

}

List individual nevisProxy requests including nevisAuth session fields (join)

Use case

Create a report that lists executed HTTP requests, e.g., timestamps, IP addresses of the end user's client, requested hosts and URLs. For every request, include the user's unit name from the session.

In this use case, we expect the number of returned requests to be quite large (e.g., 10'000).

It is assumed that the unitDisplayName has been added to the session as a custom log property.

Solution

- Filter ProxyRequest objects as follows:

- Match the first or the second criterion below:

- Return results having a parent of type AuthSession:

- Match all such parents (i.e., do not filter on attributes of the parents).

- Return the parent as inner hit, but only include the field custom.unitDisplayName of the parent.

- Return results for which the parentfield does not exist.

- Sort by event @timestamp.

- Return the ProxyRequest fields @timestamp etc.

- Make sure that a maximum of 10'000 requests are returned (size).

- Use "_source" to specify which fields to include in the result. This will reduce the size of the response. Note that it is also possible to use "f**ields" instead. However, in that case you will get arrays. Moreover, it only works for non-nested fields.

- Replace the

notfilter by"query": { "match_all" : { } }. This will improve the performance. However, note that in this case an optimized query execution engine could decide to skip thehas_parentfilter. If that happens, the query will never return any session data.

From Elasticsearch version 6.x onwards, the type concept is not supported anymore (see <http://www.elastic.co/guide/en/elasticsearch/reference/6.2/removal-of-types.html/>). To guarantee that the data model remains compatible with the model of nevisReports, we have added a custom field type in the data model.

The samples below show how to solve the use case for both scenarios (before and after version 6.x of Elasticsearch).

POST /events-*/ProxyRequest/_search

{

"query": {

"filtered": {

"filter": {

"or": [

{

"has_parent" : {

"query": { "match_all" : { } },

"parent_type" : "AuthSession",

"inner_hits" : {

"_source": "custom.unitDisplayName"

}

}

},

{

"not": {

"filter": {

"exists": {

"field": "_parent"

}

}

}

}

]

}

}

},

"sort": { "@timestamp": { "order": "desc", "unmapped_type" : "date" } },

"_source": [ "@timestamp", "agentIP", "host", "reqPath", "reqQuery" ],

"size": 10000

}

POST /events-*/_search

{

"query":

{

"bool": {

"must": [

{

"term": {

"type": {

"value": "ProxyRequest"

}

}

}

],

"filter": [{

"bool": {

"should":[{

"has_parent" : {

"query": { "match_all" : { } },

"parent_type" : "AuthSession",

"inner_hits" : {

"_source": "custom.unitDisplayName"

}

}

}]

}

}],

"must_not": [

{

"bool": {

"filter": {

"exists": {

"field": "_parent"

}

}

}

}

]

}

},

"sort": { "@timestamp": { "order": "desc", "unmapped_type" : "date" }},

"_source": [ "@timestamp", "agentIP", "host", "reqPath", "reqQuery" ],

"size": 10000

}

Example results

Below you see the output of the first two hits. Note that the first hit has no parent session.

{

"took": 143,

"timed_out": false,

"_shards": {

"total": 2,

"successful": 2,

"failed": 0

},

"hits": {

"total": 256,

"max_score": null,

"hits": [

{

"_index": "events-2015.07",

"_type": "ProxyRequest",

"_id": "AU7fnsezDNO81WoMP6en",

"_score": null,

"_source": {

"@timestamp": "2015-07-30T14:08:14.000Z",

"host": "adnlt275-vm",

"agentIP": "192.168.13.255",

"reqQuery": "?login",

"reqPath": "/nevisidm/admin/"

},

"sort": [

1438265294000

],

"inner_hits": {

"AuthSession": {

"hits": {

"total": 1,

"max_score": 1,

"hits": [

{

"_index": "events-2015.07",

"_type": "AuthSession",

"_id": "484ba8c0ff0d0wmrjaPpkKWbjHiK5hs6BiQ7mn1+Nd/brTKVXTUerRY=",

"_score": 1,

"_source": {

"custom": {

"unitDisplayName": "foobar fighters (EN)"

}

}

}

]

}

}

}

},

{

"_index": "events-2015.07",

"_type": "ProxyRequest",

"_id": "AU7eTRFNXepvcNf9nPS-",

"_score": null,

"fields": {

"reqPath": [

"/nevisidm/admin/UserImport.do"

],

"clientIP": [

"192.168.15.123"

],

"@timestamp": [

"2015-07-17T09:54:08.000Z"

],

"host": [

"adnlt275-vm"

]

},

"sort": [

1437126848000

],

"inner_hits": {

"AuthSession": {

"hits": {

"total": 0,

"max_score": null,

"hits": []

}

}

}

}

...

]

}

}

Alternative solutions

The above query is fairly complex. If you only want requests that are part of a session, try the following approach:

POST /events*/ProxyRequest/_search

{

"query": {

"has_parent" : {

"query": { "match_all" : { } },

"parent_type" : "AuthSession",

"inner_hits" : {

"_source": "custom.userFullName"

}

}

},

"sort": { "@timestamp": { "order": "asc" }},

"size": 10000,

"fields": [ "@timestamp", "clientIP", "host", "reqPath", "reqQuery" ]

}

POST /events*/ProxyRequest/_search

{

"query": {

"has_parent" : {

"query": { "match_all" : { } },

"parent_type" : "AuthSession",

"inner_hits" : {

"_source": "custom.userFullName"

}

}

},

"sort": { "@timestamp": { "order": "asc" }},

"size": 10000,

"stored_fields": [ "@timestamp", "clientIP", "host", "reqPath", "reqQuery" ]

}

Show nevisProxy requests in a given time frame

Use case

Create a report that shows the number of logins per month during the last three months, for each user.

Solution

- Perform a filtered query:

- Term: type= ProxyRequest

- Filter on time range (for the sake of performance, we exclude aggregates outside the range).

- Do a bucket aggregation on userID.

- Perform a nested "date_histogram" aggregation in each bucket.

- Add

| |/Mto the range filter to round timestamps automatically, either to the start of the month (for "gte") or to the end of the month (for "lte"). This is helpful if the caller does not want to do any date math but just wants to transfer the from/to selection from the GUI to the filter. - To ensure that empty buckets in-between are returned, the "date_histogram" aggregation uses the "min_doc_count" option. "extended_bounds" guarantees that buckets start/end at the given months, even if the starting/ending bucket(s) is/are empty.

From Elasticsearch version 6.x onwards, the type concept is not supported anymore. To guarantee that the data model remains compatible with the model of nevisReports, we have added a custom field type in the data model. The samples below show how to solve the use case for both scenarios (before and after version 6.x of Elasticsearch).

POST /events-*/_search

{

"query": {

"filtered": {

"query": {

"term": {

"_type": "ProxyRequest"

}

},

"filter": {

"range": {

"@timestamp": {

"gte": "2015-05||/M",

"lte": "2015-07||/M"

}

}

}

}

},

"size": 0,

"aggs": {

"logins": {

"terms": {

"field": "userID"

},

"aggs": {

"_logins_over_time": {

"date_histogram": {

"field": "@timestamp",

"interval": "month",

"format": "yyyy-MM",

"min_doc_count": 0,

"extended_bounds": {

"min": "2015-05",

"max": "2015-07"

}

}

}

}

}

}

}

POST /events-*/_search

{

"query": {

"bool": {

"must": [

{

"term": {

"type": {

"value": "ProxyRequest"

}

}

}

],

"filter": {

"range": {

"@timestamp": {

"gte": "2015-05||/M",

"lte": "2015-07||/M"

}

}

}

}

},

"size": 0,

"aggs": {

"logins": {

"terms": {

"field": "userID.raw"

},

"aggs": {

"_logins_over_time": {

"date_histogram": {

"field": "@timestamp",

"interval": "month",

"format": "yyyy-MM",

"min_doc_count": 0,

"extended_bounds": {

"min": "2015-05",

"max": "2015-07"

}

}

}

}

}

}

}

{

...

"aggregations": {

"logins": {

"doc_count_error_upper_bound": 0,

"sum_other_doc_count": 0,

"buckets": [

{

"key": "foobar",

"doc_count": 1,

"_logins_over_time": {

"buckets": [

{

"key_as_string": "2015-05",

"key": 1430438400000,

"doc_count": 0

},

{

"key_as_string": "2015-06",

"key": 1433116800000,

"doc_count": 0

},

{

"key_as_string": "2015-07",

"key": 1435708800000,

"doc_count": 1

}

]

}

},

{

"key": "ich",

"doc_count": 1,

"_logins_over_time": {

"buckets": [

{

"key_as_string": "2015-05",

"key": 1430438400000,

"doc_count": 0

},

{

"key_as_string": "2015-06",

"key": 1433116800000,

"doc_count": 0

},

{

"key_as_string": "2015-07",

"key": 1435708800000,

"doc_count": 1

}

]

}

}

]

}

}

}

Elasticsearch tips and tricks

The following tips and tricks may help you improve your Elasticsearch queries:

Filter your document type inside the URL for shorter queries. For example, instead of simply posting your query to

/events-*/_search, add the more verbose"type": "ProxyRequest"filter.When sorting, specify unmapped_type. Thus, you can avoid errors in case an index does not (yet) contain any document of the given type (see this example)

If you have a "children" aggregation, and "filters" as a sub-aggregation, prefix the field names with a document type. For other sub-aggregations such as "terms", the document type prefix seems to be optional.

Document type prefix"aggs": {

"_childrenProxyRequest": {

"children": { <===== children aggregation

"type": "ProxyRequest"

},

"aggs": {

"_http": {

"filters": { <======== filters sub-aggregation

"filters": {

"_2xx": {

"range": {

"ProxyRequest.status": { <======== must have "ProxyRequest" document type prefix. If the sub-aggregation were to be "terms" instead of "filters", prefix is optional

"gte": 200,

"lte": 299

}

}

},From Elasticsearch 2.x, use the "bool" query as root query. The "bool" query supports filters.

Elasticsearch Document Types

Inside Elasticsearch, each log event is stored as a separate document. In some cases, there is the need for querying documents based on a relation, for example if you want to count all proxy requests per session. For these cases, Elasticsearch provides the parent-child relationship to relate documents.

Relations between document types

ProxyRequest and AuthEvent are children of their corresponding AuthSession. If an event does not belong to a session, the parent is not set.

ProxyRequest

The table below lists the Elasticsearch fields that are available for ProxyRequest documents. The following conventions are used:

- When a field name is in bold, then the field is generated from other fields to allow easier querying.

- Fields are of type string, unless stated otherwise in the description.

| Elasticsearch field | Example values | Optional | Description |

|---|---|---|---|

| type | "ProxyRequest" | This field holds the document type (mapping type).This field is available when running nevisReports with Elasticsearch 6.x and higher, to differentiate between the various document types used for nevisReports. Previously, Elasticsearch Mapping Types served the same purpose. | |

| _type | "doc" | This field always has "doc" as value. It is the default mapping type set by the Logstash. | |

| host | "nevapl13" | The log sending host as defined in the logstash-forwarder configuration. | |

| comp | "nevisproxy" | This field's value is always "nevisproxy". | |

| instance | "proxynb" | nevisProxy instance name. | |

| logVersion | "2" | The log version value is increased when the log format is changed in a way that must be detectable. | |

| @timestamp | 2015-06-30T15:30:29.000Z 2015-06-30T15:30:29.000+0100 | The value is of type date and includes time zone information. | |

| clientIP | "192.168.13.255" | The IP of the client host directly connecting to the nevisProxy server. In most setups this is the end user's IP. In some setups however, this can be the IP of another proxy or a load balancer instead of the end user's IP. | |

| xForwardedFor | ["192.168.13.252"] ["192.168.13.252", "2.3.4.5"] | optional | Refers to the HTTP header "X-Forwarded-For".Can contain multiple comma-separated IP addresses (more info). |

| agentIP | "192.168.13.255" | The value of the first IP inside the xFowardedFor field, or, if the field is empty, the clientIP. | |

| trID | "c0a80dff-2adc-a8c0ff0d-14e44a96556-00000006" | nevisProxy transaction ID: unique ID assigned to the current request. | |

| sessionID | "2600a8c0ff0d8zs FqXl1VfV0XQv7u73d WbvoDXVCw5kwZH11//jthf8=" | optional | nevisProxy session ID (also known as clID in other places). Groups multiple requests by the same agent. Note that there are spaces inserted in the example value for readability. In default nevisProxy installations this field may be empty for some requests (see section Reliable session ID logging in the nevisReports reference guide). |

| join_field | AuthSession (parent) "join_field": "AuthSession" ProxyRequest (child) "join_field": { "parent": "2600a8c0ff0d8zs FqXl1VfV0XQv7u73d WbvoDXVCw5kwZH11//jthf8=", "name": "ProxyRequest" } | The link to the AuthSession parent document of this ProxyRequest document.For more information on join_field, see join field. | |

| sslProtocol | "TLSv1.2" | optional | The SSL protocol. |

| sslCipher | "DHE-RSA-AES256-SHA" | optional | The SSL connection cipher. |

| sslClientDN | "CN=Nevis Demo Client/O=AdNovum Informatik AG/C=ch" | optional | The client's distinguished name (DN) in case of two-way SSL. |

| serviceName | "www-test.siven.ch" | The name of the proxy "service" (ServerName) that handled the HTTP request.(warning) The serviceName field is the same as the URL's host name as seen in the web browser only if a service exactly matching the URL's host name exists. When such a service does not exist, the serviceName will contain the name of a wildcard or default service and thus differ from the host name in the browser. | |

| port | 443 | The TCP port used to connect to the nevisProxy server. | |

| reqMethod | "GET" | The HTTP verb used in the request. | |

| reqPath | "/confluence/favicon.ico" | optional | The URL path without query string. |

| reqQuery | "details=none" | optional | The query string from the URL (without "?"). |

| reqPathComp1 | "confluence" | The first component of the URL path. This component usually corresponds to the application.The field is empty if the URL contains a slash only at the start. | |

| userAgent | "Mozilla/5.0 (X11; Linux x86_64; rv:31.0) Gecko/20100101 Firefox/31.0" | optional | The userAgent string in the HTTP header. |

| userAgentParsed | { nested object } | optional | A nested object of fields parsed from userAgent string using logstash useragent filter. See: Fields parsed from userAgent string |

| referer | "/index.html" | optional | The referer string in the HTTP header. |

| inContentType | "text/html" | optional | The content-type string in the HTTP header of the request. Refers to the content (MIME) type of the request body. |

| outContentType | "text/html" | optional | The content-type string in the HTTP header of the response. Refers to the content (MIME) type of the response body. |

| status | 200 | The response HTTP status code that is sent to the client. | |

| originalStatus | 404 | The original response HTTP status code before being altered by ErrorFilters. By default, this field is the same as the status field. If your nevisProxy setup contains ErrorFilters to change status code sent to the client, you should configure to log the original unaltered HTTP status in this field (see chapter: Logging originalHttpStatus in the nevisReports reference guide). Regardless of configuration this field always falls back to status if the original status is not available. | |

| dT | 258516 | The time to serve the response in µs (microseconds). | |

| dTF | [ 256 ] | Same as dT but excludes Apache processing time.; Expressed in ms (milliseconds).; dTF = dT - Apache time; Note, that dT and dTF include time taken to make back-end calls. | |

| dTProxy | 5 | The total time taken in nevisProxy without time taken by back ends. Expressed in ms (milliseconds). dTProxy = dT - sum of (dTB) | |

| dTB | [ 251 ][ 100, 151 ] | optional | Total time taken to make back-end call(s) in ms (milliseconds). Note: This field is an array. If there are multiple back-end calls, time taken for each call is represented as one entry. Entries are in the same order as the calls, i.e., if the first call took 100 ms and the second call took 151 ms, the value of this field is [ 100, 151 ]. |

| sCB | [ 200 ][ 200, 404 ] | optional | Response HTTP status codes received from each back-end call. Entries are in the same order as the calls. Example: The value [ 200 , 404 ] means that two back-end calls were made, with the first call resulting in 200 and the second call resulting in 404. |

| invS | ["ServletNevisWorkflow100"] ["NevisAuthConnectorLDAP"] ["NevisLogrendConnectoridm"] | optional | The name(s) of the servlet(s) being invoked. Entries are in the same order as the servlets' invocation. |

| application | "Zimbra" "Nevis (authentication)" "Nevis (others)" | The name of back-end content-provider application associated with this request. (See chapter Custom Logstash mapping configuration in the nevisReports reference guide.) If the call is routed to nevisAuth/nevisLogrend, the name "Nevis (authentication)" is assigned. If the call has no back-end requests and is handled entirely within nevisProxy, the name "Nevis (others)" is assigned. | |

| adrB | ["nevisreports-test-sg:8777"]["nevisreports-iam-sg:8991", "localhost:8988"] | optional | Address of the back end(s) composed of host name and port number. Entries are in the same order as the calls. |

| hostB | ["nevisreports-test-sg"]["nevisreports-iam-sg", "localhost"] | optional | Same as adrB but only the host name. |

| portB | ["8777"]["8991", "8988"] | optional | Same as adrB but only the port number. |

| ipB | ["10.21.208.86"]["10.21.208.86","127.0.0.1"] | optional | IP address of the back end(s). Entries are in the same order as the calls. |

| events | ["AU01"]["AU01", "P02"] | optional | nevisProxy events (see Appendix C in the nevisProxy reference guide). |

| userID | "00233453" | The user ID retrieved from the authentication back end, passed on from nevisAuth to nevisProxy inside the SecToken. Only available after successful authentication. | |

| loginID | "lior" | The login ID as entered by the user. After a successful authentication it is possible that the user login ID in the loginID field is overwritten by the nevisIDM login ID for the same session. Only available after a successful authentication. Can be empty in some setups. | |

| inBytes | 566 | The number of total bytes coming in (HTTP headers + body). Long type data, always >0. | |

| outBytes | 100 | The number of total bytes going out (HTTP headers + body). Long type data, always >0. | |

custom.<x> | any valid JSON value | optional | <x> can be any valid JSON attribute name (see also the special case for time stamps below).Elasticsearch derives the data type from the value (click here for more information). |

custom.<y>Timestamp | 2015-06-30T15:30:29.009+0100 | optional | Custom attribute name ending with Timestamp (date type, including time zone information). |

Fields parsed from userAgent string

The examples given are observed known values over a wide range of user agent strings. However, there is no guarantee that the underlying parser or the browser's userAgent strings will stay exactly the same.

For more examples: see a sample list of user agent strings and the parsed values here: userAgent-samples.json.

| Elasticsearch field | Example values | Optional | Description |

|---|---|---|---|

| userAgentParsed.name | - Chrome, Firefox, Safari, IE - Chrome Mobile, Mobile Safari, Firefox iOS - AppleMail, Thunderbird, Outlook - Apache-HttpClient, Java, CFNetwork - Python Requests, Other | Name of the user agent, e.g., the browser software. | |

| userAgentParsed.major | 47, 48, 49, 50 | optional | Major version of the user agent |

| userAgentParsed.minor | 0, 7, 9, 11, 12 | optional | Minor version of the user agent |

| userAgentParsed.patch | 1, 1599, 2228 | optional | Patch version of the user agent |

| userAgentParsed.os | Mac OS X 10.6, Windows 10, Red Hat, iOS 8.0, Android 6.0, Other | The operating system string (name with version string) | |

| userAgentParsed.os_name | Mac OS X, Windows 10, Red Hat, iOS, Android, Other | Name of the operating system (name without version string) | |

| userAgentParsed.os_major | 10 | optional | Major version of the operating system |

| userAgentParsed.os_minor | 6, 7, 8 | optional | Minor version of the operating system |

| userAgentParsed.device | - iPad, iPhone, iOS-Device, Samsung SM-G920F, Nexus 5X, Generic Feature Phone - Spider, Other | Type of device Note: Laptops and desktops are usually recognized as "Others" |

Adding custom fields

For information on adding custom fields, see section: Custom Log Properties.

When custom properties are added inside the log files, these properties will be indexed automatically as Elasticsearch fields, because nevisReports uses schema-less indexing.

AuthEvent

The following table lists fields that occur in AuthEvent documents. In some descriptions we refer to technical terms that are defined in the nevisAuth reference guide (for example, AuthState).

Some fields occur only when the log type is sessionEvent or acaaEvent (see the logType column in the table below).

Events of log type acaaEvent are only available starting from nevisReports 1.2.1.7 and with the nevisAdapt 1.0 release.

| Elasticsearch field | Example values | logType | Optional | Description |

|---|---|---|---|---|

| type | "AuthEvent" | This field holds the document type (mapping type).This field is available when running nevisReports with Elasticsearch 6.x and higher, to differentiate between the various document types used for nevisReports. Previously, Elasticsearch Mapping Types served the same purpose. | ||

| _type | "doc" | This field always has "doc" as value. It is the default mapping type set by the Logstash. | ||

| host | "nevapl13" | <any> | The log-sending host as defined in the Filebeat configuration. | |

| comp | "nevisauth" | <any> | This field's value is always "nevisauth". | |

| instance | "authnb" | <any> | The nevisAuth instance name. | |

| logVersion | "1" | <any> | The log version value is increased when the log format is changed in a way that must be detectable. | |

| @timestamp | 2015-06-30T15:30:29.000Z 2015-06-30T15:30:29.000+0100 | <any> | The value is of date type and includes time zone information. | |

| logType | "event" "sessionEvent" "acaaEvent" | <any> | The log type. The following log types are available: event: Stored as an AuthEvent document type. sessionEvent: Stored as AuthEvent and AuthSession document type. acaaEvent: Stored as an AuthEvent document type. | |

| eventType | "session-terminated" | <any> | The type of event. For all possible values, see the [List of event types] below. | |

| trID | "c0a80dff-6c6c-a8c0ff0d-14e9b30b648-00000000" | <any> | optional | The transaction ID (usually the same as the ProxyRequest.trID). |

| sessionID | "GdLf3s6nQjbfRFY-yi8mYOPGW25aJ9_kJujbypoDhCs" | <any> | optional | The nevisAuth session ID. This ID can change when a session becomes authenticated. |

| conversationID | "680026204835" | <any> | optional | The nevisAuth conversation ID. The conversation in this case is a sequence of requests and responses that forms one operation, e.g., an authentication or a step-up operation that renders forms. |

| client | { nested object } | sessionEvent acaaEvent | The client that talks to the nevisAuth server (usually nevisProxy). | |

| client.sessionID | "2fb3000ae5cdRHQtAH 3VObujYtYiUnfn0tul BVEZGYyv6hrD1hz1YGE=" | <any> | optional | The nevisProxy session ID (also known as clID in other places).Note that there are spaces inserted in the example value for readability. |

| client.clientID | "12441" | sessionEvent acaaEvent | The identification ID of the client (also known as actorId in other places). | |

| client.entryPoint | "nbnevap07.zh.adnovum.ch" | sessionEvent acaaEvent | The client entry point is the human-readable name provided by the client to identify itself. In nevisProxy configurations that are generated by nevisAdmin, the entryPoint value refers to the nevisProxy instance's host name. | |

| client.sslCipher | "TLS_DHE_RSA_WITH_AES_256_CBC_SHA" | sessionEvent | optional | The SSL connection cipher. |

| client.sslClientDN | "CN=nbcert,O=Adnovum Informatik AG,C=ch" | sessionEvent | optional | The distinguished name (DN), as presented in the client certificate. |

| client.clientIP | "10.0.205.229" | sessionEvent acaaEvent | The IP of the client host. | |

| agent | { nested object } | sessionEvent acaaEvent | The end user's client. The data is provided by the intermediate client above (usually nevisProxy). | |

| agent.userAgent | "Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.1.7) Gecko/20091221 Firefox/3.5.7" | sessionEvent acaaEvent | optional | The userAgent string in the HTTP header (same as ProxyRequest.userAgent). |

| agent.userAgentParsed | { nested object } | sessionEvent acaaEvent | optional | A nested object of fields parsed from userAgent string using logstash useragent filter. |

| agent.agentIP | "10.0.204.245" | sessionEvent acaaEvent | The IP of the end user's client if available (should be the same as the ProxyRequest.agentIP). | |

| agent.sslProtocol | "TLSv1" | sessionEvent | optional | The SSL protocol (same as ProxyRequest.sslProtocol). |

| agent.sslCipher | "DHE-RSA-AES256-SHA" | sessionEvent | optional | The SSL connection cipher (same as ProxyRequest.sslCipher). |

| agent.resPath | https://nbnevap07.zh.adnovum.ch/monitoringCertLogin/ | sessionEvent | The original URL (resource) that the agent (end user) wanted to access. | |

| agent.resQuery | "a=b" | sessionEvent | optional | |

| agent.resPathComp1 | "monitoringCertLogin""/" | sessionEvent | ||

| agent.reqPath | https://nbnevap07.zh.adnovum.ch/monitoringCertLogin/ | sessionEvent | The URL of the current agent request that caused the nevisAuth back end to be invoked (same as ProxyRequest.reqPath). | |

| agent.reqQuery | "login" | sessionEvent | optional | The query string part of the request (without "?", same as ProxyRequest.reqQuery). |

| hostName | "nbnevap07.zh.adnovum.ch" | sessionEventacaaEvent | The host name of the internet protocol (IP) interface that received the request. | |

| port | 8991 | sessionEventacaaEvent | The port number (long type) of the internet protocol (IP) interface that received the request. | |

| sessionStartTimestamp | 2015-10-08T17:40:26.044+0200 | sessionEvent | The start time (date type) of the current session. | |

| sessionEndTimestamp | 2015-10-08T17:45:43.127+0200 | sessionEvent | optional | The end time (date type) of the current session. |

| sessionEndReason | "terminated-by-client" | sessionEvent | optional | The reason why the session was finished. For all possible values, see the List of session end reasons, below. |

| loginID | "user1" "CN=nbcert,O=Adnovum Informatik AG,C=ch" | sessionEvent acaaEvent | This field contains the intended (but unverified) user identity (name) which is usually visible to the end user. It is set by an AuthState before successful authentication. - In the case of a classic client certificate login, this is the same value as ProxyRequest.sslClientDN. - In the case of a form-based login, this is the value that the user entered into the User Name field. In many setups, this user identity name differs from the login ID defined in nevisIDM and the user ID in the userID field (see below). | |

| userID | "user1" "1260" | sessionEvent acaaEvent | optional | The principal name as provided by the authentication back end. It is set by an AuthState during the authentication process. This user ID is used to pass on the identity, e.g., via a SecToken. This ID cannot be used to check whether a session is authenticated or not since it is also set on failed authentication attempts (e.g., by the nevisIDM IdmPasswordVerifyState). |

| authLevel | "auth.weak" "auth.strong" "STRONG" | sessionEvent acaaEvent | optional | The authentication strength of the session after a successful login or step-up (e.g., if the user was authenticated by a simple password only, the authentication strength is set to weak). For possible values, see the authLevel attribute of the AuthState and ResultCond elements in your nevisAuth configuration. |

| roles | ["nevisIdm", "nevisIdm.SelfAdmin", "auth.strong"] | sessionEvent | optional | The roles received by the user (based on role information from the authentication back end).The field usually contains the current authLevel name as well. |

| realm | "SSO" | sessionEvent acaaEvent | The name of the SSO authentication domain.For possible names, see the Domain element in your nevisAuth configuration. | |

| language | "en" | sessionEvent | The natural language (as ISO code) in which nevisAuth GUIs are rendered. The value is selected from configured languages based on the user agent's language header or, in rare cases, based on the nevisIDM-defined language. | |

| eventTrail | [ nested array ] | sessionEvent acaaEvent | The audit events that can be generated by AuthStates and are collected during the session. | |

| eventTrail[].stateName | "SSOIdmCertificate" | sessionEvent acaaEvent | The name of the AuthState in the configuration. | |

| eventTrail[].stateClass | "ch.nevis.esauth.auth.states.x509.X509Login" | sessionEvent acaaEvent | The class of the AuthState. | |

| eventTrail[].timestamp | 2015-10-08T17:40:25.939+0200 | sessionEvent acaaEvent | The event timestamp (date type). It includes time zone information. | |

| eventTrail[].tech | "X509" "Kerberos" "LDAP" | sessionEvent acaaEvent | A short text that indicates the technology used in the operation. | |

| eventTrail[].type | "token" | sessionEvent acaaEvent | The authentication type of the event (trail). For all possible authentication types, see the: List of event trail authentication types, below. | |

| eventTrail[].detail | "CN=nbcert, O=Adnovum Informatik AG, C=ch" | sessionEvent acaaEvent | optional | AuthState-dependent information. |

| acaa | { nested object } | acaaEvent | The detailed information from the ACAA AuthState. Only available in pre-releases of nevisReports and nevisAuth. | |

| acaa.devicerecognition | { nested object } | acaaEvent | The detailed information from the devicerecognition module. | |

| acaa.geolocation | { nested object } | acaaEvent | The detailed information from the geolocation module. | |

| acaa.timeofday | { nested object } | acaaEvent | The detailed information from the timeofday module. | |

| acaa.... | TODO | |||

custom.<x> | <any> | optional | <x> can be any valid JSON attribute name (see also the special case for timestamps below).Elasticsearch derives the data type from the value. | |

custom.<y>Timestamp | 2015-06-30T15:30:29.009+0100 | <any> | optional | Custom attribute name ending with Timestamp (date type, including time zone information). |

customEvent.<x> | <any> | optional | <x> can be any valid JSON attribute name (see also the special case for timestamps below)Elasticsearch derives the data type from the value. | |

customEvent.<y>Timestamp | 2015-06-30T15:30:29.009+0100 | <any> | optional | Custom attribute name ending with Timestamp (date type, including time zone information). |

List of event types

The event type describes why/when an event was logged.

The table below lists all possible event types.

| Event type | Reason for logging the event |

|---|---|

| authenticate-completed | A completed authentication operation (the AUTH_DONE status has been reached). |

| stepup-completed | A completed step-up operation (the AUTH_DONE status has been reached). Traditionally, a successfully completed step-up operation leads to an increased authentication level (authLevel). Note that a step-up may also be used for re-authentication, e.g., to trigger a password change operation. In this case, the authentication level is not increased. |

| logout-completed | A completed logout operation requested by the user (usually via logout URL). |

| session-terminated | A session that is killed out of the context of a user request. This can happen for various reasons (see the List of session end reasons). |

<operation>-completed | A completed operation (leading to the AUTH_DONE status). |

<operation>-aborted | An aborted operation (which caused an AUTH_ERROR status). |

| acaaRiskScore | A risk scoring event within the ACAA AuthState. Usually happens before AUTH_DONE or AUTH_ERROR. |

| acaaPersist | A risk persist event when the user successfully completed a login (AUTH_DONE) and risk scores are persisted. |

List of session end reasons

The following values can occur in the sessionEndReason field when a session finishes:

| Session end reason | Description |

|---|---|

| expired | The SessionReaper has removed a timed-out session. |

| terminated-by-client | A kill call was made from an external client (such as nevisProxy reaching a session time-out). |

| terminated-by-flow | The session was killed by AuthState logic (e.g., through response.setInvalidateSession()). |

| aborted | The authentication operation failed (that is, the AUTH_ERROR status was triggered by the configuration or a Java exception). |

| redirected | A user request resulted in an AUTH_REDIRECT status. |

| logout | A user-triggered logout has been completed. |

| stateless-domain | The domain configuration signals stateless authentication support. |

| stateless-request | The client signals stateless authentication support. |

List of event trail authentication types

The table below lists the event trail authentication types.

This documentation is based on the nevisAuth Java class ch.nevis.esauth.auth.engine.AuthMarker. For further information, see the nevisAuth reference guide, chapter "Auth Markers", and the Marker row in the summary table included for each AuthState.

| Event trail type | Description |

|---|---|

| username/password | Classic user name/password authentication. |

| token | Authentication based on a verifiable (signed, encrypted, etc.) unique token held by the user. For example: x509, kerberos tokens |

| challenge/response | The authentication is based on a challenge/response procedure. In this case, the user is presented with a challenge to which he must respond correctly. For example: vasco, rastercards, CAPTCHA |

| extern | Federated authentication where an external, foreign service must be trusted, without being able to verify the correctness of the service. For example: SOAP- and HTTP-based integration of external authentication services. |

| federation | Federated authentication by a well-known external service and the usage of secure message transfers. For example: SAML, WS-Fed |

| one-time-password | Authentication by transmitting a one-time secret via a different communication channel. For example: TAN |

| selection | This is not an authentication step/type but refers to the selection of authentication-relevant options. For example: user profiles |

| mutation | This is not an authentication step/type but refers to a change of permanent authentication data. For example: password change |

| none | This is not an authentication step/type but a significant event in the session. |

AuthSession

Elasticsearch creates an AuthSession document for every user session. The document is updated when there is an update of the session information or when the session is over.

Behind the scenes, the following happens: Whenever Elasticsearch receives a nevisAuth JSON event with the sessionEvent log type, it creates/updates an AuthSession document. It then adds the same event as an AuthEvent document to keep a full history of such events. See the AuthEvent field table for information about available fields.

From nevisReports 4.0 onwards, document and mapping types are set in different fields. The document type is set in the type field, whereas the _type field is always set to the "doc" mapping type. In case of an AuthSession document, the document type set in the type field must be "AuthSession".

Joins

In Elasticsearch, the AuthSession document is the parent of one or more ProxyRequest or AuthEvent documents. This can be used, for example, to include session information when reporting on proxy requests or to aggregate proxy requests by session. For examples, see section: Elasticsearch Introduction.

In the standard configuration, nevisReports switches the index files every month (for performance and maintenance reasons). Thus, if a user session starts in month 1 and ends in month 2, there will be two AuthSession documents, one in each month's index. Because in this case the session ends in month 2, the AuthSession document of the first month's index will not yet have the sessionEndTimestamp set. In reports you can handle this special case as follows:

- Especially when displaying sessions across month boundaries, you may want to include the actual session only once. To accomplish this, require a non-null sessionEndTimestamp value in the query. The matching AuthSession objects will contain the final session information.

- If you are joining AuthSession documents to other documents via the parent-child relationship, the joins will only include documents inside the index (month) where the AuthSession document resides. In such cases, it may be preferable to report on both sessions so that all available information can be included in the report.

AuditEvent

The table below lists the Elasticsearch fields that are available for AuditEvent documents. They are created by nevisIDM only at the moment.

From an audit relevant event there are three main blocks at work:

- The actor, the system or user that executed the operation.

- The subject, in case of events defined under the "Audit events when subject user must be added" section.

- The subject should show the user's LATEST state. (for e.g. when the subject user's name is changed, the new name shall be logged).

- If the subject is being deleted in the event, the "old" state must be logged.

- The eventData, which contains:

- newValues, which lists all changed fields with their new values if there's any.

- oldValues, which lists all changed fields with theis old values if there's any.

- updatedState, which lists all fields with their actual values.

| Elasticsearch Field Name | Nested fields | Nested fields | Type | Importance* | Example Value | Description |

|---|---|---|---|---|---|---|

| logVersion | number | mandatory | 1 | The log version value is increased when the log format is changed in a way that must be detectable. | ||

| timestamp | string | mandatory | 2015-06-30T15:30:29.000Z or 2015-06-30T15:30:29.000+0100 | The value is of date type and includes time zone information - ISO-8601 format (used by most JSON parsers). | ||

| source | string | mandatory | [email protected] | The source of the audit entry in the form [machine]@[hostname] The machine is always "nevisidm", collected from log file path by Filebeat and Logstash. The hostname is the log sending host as defined in the Filebeat configuration. | ||

| eventType | string | mandatory | USER_CREATE | The nevisIDM audit log event type, also simply called Event in the text-based nevisIDM audit logs. Event type: (see AuditEventTypes and Fields) | ||

| trID | string | optional | 7f000001.3e07.0a14d672.00000024 | General transaction ID: unique ID assigned to the current request. For events triggered by the system, this value is empty. | ||

| sessionID | string | optional | 24ab80993abefbTKRgWyC5ZkUk3tNpHwwIXJ2Lj+CKPXg/mL/zB7tfk\= | nevisIDM clID (also known as session ID in other places). For events generated by the system these values are empty. | ||

| client: optional | sessionId | string | optional | G2YfYeyQv_x3L0lwW9u8bV5Z | The nevisIDM session ID, also called sessID in the text-based nevisIDM audit logs. SSO session ID of the caller (comes from sectoken). | |

| entryPoint | string | optional | nevisidm-test.zh.adnovum.ch | The entry point for audit events directly hitting the nevisIDM web application, this is also called EntryID. SSO entry ID (the nevisProxy instance) of the caller (comes from sectoken). | ||

| actor: mandatory | firstName | string | optional | Bruce | The first name of the actor as it is stored in nevisIDM. User.firstName | |

| lastName | string | optional | Lee | The last name of the actor as it is stored in nevisIDM. User.name | ||

| string | optional | [email protected] | The email address of the actor as it is stored in nevisIDM. User.email | |||

| loginId | string | mandatory | theonlybrucelee | The login ID of the actor, as identified by nevisIDM. User.loginId | ||

| extId | string | mandatory | 100 | The user ID (also know as ext ID in other places) of the actor, as identified by nevisIDM. User.extid | ||

| isTechnicalUser | boolean | mandatory | true or false (boolean) | Indicates whether the actor is a technical user according to nevisIDM or not. User.isTechnicalUser | ||

| client: mandatory | extId | string | mandatory | apClient1 | The client ID of the actor, as identified by nevisIDM.Client.extId | |

| name | string | mandatory | Default | The client name of the actor, as identified by nevisIDM. Client.clientName | ||

| unit: this is the unit of the profile which the actor have selected at login If no unit is assigned to the user, it is optional. | profileExtId | string | mandatory | prof1234 | The external ID of the user's profile which is assigned to this unit, as identified by nevisIDM. | |

| extId | string | mandatory | authtestunit002 | The unit id of the unit, as identified by nevisIDM. Unit.extId | ||

| name | string | mandatory | UnitForAuthTest2 | The unit name of the unit, as identified by nevisIDM. Unit.name | ||

| hierarchyName | string | optional | /authtestunit001/authtestunit002 | The hierarchical unit name of the unit, as identified by nevisIDM. Unit.hName | ||

| subject: optional, check the table below for exact cases | firstName | string | optional | Alan | The first name of the subject user as it is stored in nevisIDM. User.firstName | |

| lastName | string | optional | Rickman | The last name of the subject user as it is stored in nevisIDM. User.name | ||

| string | optional | [email protected] | The email address of the subject user as it is stored in nevisIDM. User.email | |||

| loginId | string | mandatory | alarick | The login ID of the subject user , as identified by nevisIDM.User.loginId | ||

| extId | string | mandatory | 1201y | The user ID (also know as ext ID in other places) of the subject user , as identified by nevisIDM. User.extid | ||

| isTechnicalUser | boolean | mandatory | false | Indicates whether the subject user is a technical user according to nevisIDM or not. User.isTechnicalUser | ||

| client: mandatory | extId | string | mandatory | apClient1 | The client ID of the subject user , as identified by nevisIDM.Client.extId | |

| name | string | mandatory | Default | The client name of the subject user, as identified by nevisIDM. Client.clientName | ||

| units [ARRAY]: lists all units of the subject user - If no unit is assigned to the user, return an empty array. - The array must be sorted by hName in an ascending order (if it's set) | profileExtId | string | mandatory | prof1234 | The external ID of the subject user's profile which is assigned to this unit, as identified by nevisIDM. | |

| extId | string | mandatory | authtestunit002 | The unit id of the unit, as identified by nevisIDM. Unit.extId | ||

| name | string | mandatory | UnitForAuthTest2 | The unit name of the unit, as identified by nevisIDM. Unit.name | ||

| hierarchyName | string | optional | /authtestunit001/authtestunit002 | The hierarchical unit name of the unit, as identified by nevisIDM. Unit.hName | ||

| eventData: Theoretically optional, but makes no sense in practice to log an empty event. | newValues | object | optional | "number": 1000002267,"boolean": true,"string":"blablabla1234" | Only fields with updated values are listed here. (Note: If it's a DELETE event, there are no new values.) Zero or more fields of the affected entity depending on the EventType (see AuditEventTypes and Fields). If a field is a Date, it should have the same format as the "timestamp" field has. | |

| oldValues | object | optional | "number": 1000002267,"boolean": true,"string":"blablabla1234" | The updated fields are listed here with their values before the update. (Note: If it's a CREATE event, there are no old values.) Zero or more fields of the affected entity depending on the EventType (see AuditEventTypes and Fields). If a field is a Date, it should have the same format as the "timestamp" field has. | ||

| updatedState | object | optional | "number": 1000002267,"boolean": true,"string":"blablabla1234" | All fields are listed here with the values after the update. (Note: If it's a DELETE event, there is no updated state.) Zero or more fields of the affected entity depending on the EventType (see AuditEventTypes and Fields). If a field is a Date, it should have the same format as the "timestamp" field has. |

Importance was set based on what is set as nullable in the database and what is not, and also based on the remarks of Lukas Westermann.

Audit events when subject user must be added

Subject users are for example the owner of a credential, an owner of a profile, authorization etc.

| Audit event type | Remark |

|---|---|

| USER_CREATE, USER_MODIFY, USER_DELETE | - |

| PROFILE_CREATE, PROFILE_MODIFY, PROFILE_DELETE | - |

| CREDENTIAL_CREATE, CREDENTIAL_MODIFY, CREDENTIAL_DELETE | - |

| CREDENTIAL_LOGIN_INFO_CREATE, CREDENTIAL_LOGIN_INFO_MODIFY, CREDENTIAL_LOGIN_INFO_DELETE | - |

| PROPERTY_VALUE_CREATE, PROPERTY_VALUE_MODIFY, PROPERTY_VALUE_DELETE | only for credential, user, profile and authorization |

| CERTIFICATE_INFO_CREATE, CERTIFICATE_INFO_MODIFY, CERTIFICATE_INFO_DELETE | - |

| MOBILE_SIGNATURE_CREATE, MOBILE_SIGNATURE_MODIFY, MOBILE_SIGNATURE_DELETE | - |

| SAML_FEDERATION_CREATE, SAML_FEDERATION_MODIFY, SAML_FEDERATION_DELETE | - |

| OATH_CREATE, OATH_MODIFY, OATH_DELETE | - |

| PERSONAL_ANSWER_CREATE, PERSONAL_ANSWER_MODIFY, PERSONAL_ANSWER_DELETE | - |

| GET_PDF_FOR_CREDENTIAL | - |

| AUTHORIZATION_CREATE, AUTHORIZATION_DELETE, AUTHORIZATION_MODIFY | - |

| AUTHORIZATION_UNIT_CREATE, AUTHORIZATION_UNIT_DELETE | - |

| AUTHORIZATION_APPL_CREATE, AUTHORIZATION_APPL_DELETE | - |

| AUTHORIZATION_CLIENT_CREATE, AUTHORIZATION_CLIENT_DELETE | - |

| SELFADMIN_MOBILE_CHANGE | - |

| ENTERPRISE_AUTHORIZATION_CREATE, ENTERPRISE_AUTHORIZATION_DELETE | - |

| AUTHORIZATION_ENTERPRISE_ROLE_CREATE, AUTHORIZATION_ENTERPRISE_ROLE_DELETE | - |

Custom Log Properties

By defining custom log properties, integrators can add environment-specific information to the nevisProxy and nevisAuth event logs.

Property naming rules

Before defining new properties, consider that Elasticsearch works reliably only if properties have unique (path) names across the nevisReports "events" indexes. The reason is two-fold:

- Within the same [document type], a field with the same name must always have the same value type.

- Some functionality requires consistent field names/types across all document types (more information).

To avoid such issues:

- Add properties only within the

custom { }orcustomEvent { }blocks.- This ensures that there is no conflict with any standard properties, since Elasticsearch internally stores properties under the full path name.

- The properties within each block MUST have different names if they have different value types (even if they appear in different document types).

- For example, in nevisProxy, you add a custom property for the login's ID (numeric), and in nevisAuth for the login's name (string). Name these properties

custom.loginIDandcustom.loginName, respectively, instead of using custom.login for both.

- For example, in nevisProxy, you add a custom property for the login's ID (numeric), and in nevisAuth for the login's name (string). Name these properties

nevisProxy custom properties

Standard nevisProxy events can be enriched with environment-specific information. This information will appear inside the custom { } block of the log events. The following can be configured:

- Property name: a valid JSON property name not containing dots.

- Property value: a supported Apache mod_log_config directive (starting with the %-sign). Some useful examples:

%{<X>}e: <X>is an environment variable supported by Apache, its modules or nevisProxy extensions.- See section DelegationFilter in the nevisProxy Reference Guide for background information about the "ENV source". Note that listed variable names may not always match those that can be used inside a mod_log_config directive.

- For additional variables, see the column "Apache Environment Variable" in the table following NProxyOp, in the section Debugging of the nevisProxy Reference Guide.

%{<IN-H>}i: <IN-H>is an HTTP request header.%{<OUT-H>}o: <OUT-H>is an HTTP response header.

- JSON formatted: whether or not the value is valid JSON by itself.

- When not ticked: the value is not (always) valid JSON, so it will be quoted when logged. Use this for:

- Any request or response header, since any header can be missing causing Apache to log the string - (minus sign, must be quoted in JSON).

- Environment variables that contain strings.

- A directive such as %b that can evaluate to - (minus sign, must be quoted in JSON).

- When ticked: the value itself is valid JSON and must be logged unquoted. Used for advanced use cases only. Use this for:

- A (custom) environment variable that always contains valid JSON and is never missing.

- A directive such as %B that is guaranteed to evaluate to a number.

- When not ticked: the value is not (always) valid JSON, so it will be quoted when logged. Use this for:

nevisAdmin configuration

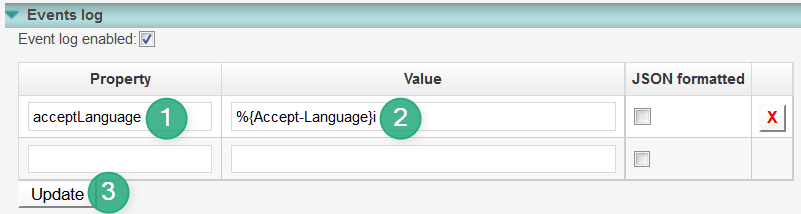

To configure custom properties, do the following inside the Events log section (its location inside the nevisAdmin GUI is explained in the section Hosts running nevisProxy):

- Enter the property in the Property field.

- Enter the value in the Value field (usually the value starts with %).

- Click Update, then commit and deploy (not shown).

After deploying the configuration, the file navajo.xml will be updated. See the section Manual configuration below if you want to have a look at the generated logging directive.

Manual configuration

- Open the file /var/opt/nevisproxy/$PROXY_INSTANCE_NAME/conf/navajo.xml.

- Look up the CustomLogs element.

- Between the curly brackets following \"custom\": add your properties in valid JSON syntax, for example:

\"acceptLanguage\":\"%{Accept-Language}i\", \"responseSize\":\"%B\"

- Restart the nevisProxy instance.

Never add properties at the root level (i.e., outside of the custom map) since this may cause trouble with the Elasticsearch field mapping, or with future nevisReports releases.nevisAuth custom properties

If you use a version of nevisAuth > 4.21.10.0, then check chapter "Customize event content" in the nevisAuth Reference Guide on how to write custom properties. For all versions of nevisAuth <= 4.21.10.0, follow the next steps to set custom properties.

Standard nevisAuth events can be enriched with environment-specific information. This information will appear inside the custom { } block of the log events.

To add custom properties:

- Edit the vmargs.conf file.

- In nevisAdmin, go to the File Manager of the nevisAuth instance and edit the file there.

- When using manual configuration, you can find the file here: /var/opt/nevisauth/$AUTH_INSTANCE_NAME/conf/vmargs.conf.

- Add the desired property, usually at the end of the file:

- Add a line in this form: -Devent.log.custom.field.CUSTOM_PROPERTY_NAME=VALUE

- Replace CUSTOM_PROPERTY_NAME with the name of your property following the naming rules defined at the beginning of this section.

- Replace VALUE with a variable or EL expression that evaluates to the needed value. See the tip below for syntax requirements. By default, the value will be quoted and JSON-escaped.

- If the value must appear without quoting, for example because it always evaluates to a number or because it is a JSON array or map, add the following additional line for the property: -Devent.log.custom.field.CUSTOM_PROPERTY_NAME.format=JSON

How to define expressions inside vmargs.conf

- Refer to the nevisAuth reference guide, chapters "Variable Expressions" and "Java EL Expressions", for more information on the language.

- The dollar ($) character starting the expression must be escaped to prevent interpretation by the shell.

- The hash character can not be used for starting the EL expression because it is interpreted as the start of a comment.

- After the open curly bracket

{character, add a space to force expressions to be evaluated as EL expressions. The space prevents evaluation as a variable expression. The following table lists some example property definitions.

| Example property definition in vmargs.conf | Example value | Description |

|---|---|---|

-Devent.log.custom.field.userExtId= "\${sess:ch.nevis.idm.User.extId}" | 1002 | The nevisIDM external ID of the logged-in user |

-Devent.log.custom.field.userEmail= "\${sess:ch.nevis.idm.User.email}" | "[email protected]" | The e-mail address of the logged-in user. |

-Devent.log.custom.field.userLastName= "\${sess:ch.nevis.idm.User.name}" | "Doe" | The last name of the logged-in user. |

-Devent.log.custom.field.userGivenName= "\${sess:ch.nevis.idm.User.firstName}" | "John" | The first name of the logged-in user. |