Persistence backend

Based on the deployment type, nevisMeta supports two database types as persistence backend: MariaDB (relational database) and Couchbase (non-relational database).

| Deployment type | Supported persistence backend |

|---|---|

| Standalone | Couchbase, MariaDB |

| Glassfish | Couchbase |

MariaDB persistence backend

Stable. Recommended for production systems.

Relational databases have a proven track record as a reliable choice to persist structured data. To be in line with the software stack of Nevis components, MariaDB Server is supported as a persistence backend starting with nevisMeta 1.5.0.0.

Setup of MariaDB for nevisMeta

This chapter does not guide you through the setup of a MariaDB server. If you need step-by-step instructions to install a new MariaDB server, refer to the MariaDB Server documentation.

It is strongly recommended that you back up your current nevisMeta MariaDB database (if any) before you create a new one.

Creating a new database for nevisMeta

The user in the following command must have the right to create a new database.

Run the following command:

nevismeta <instance name> init-mariadb <options>

The following options <options> are available:

| Option | Default | Description | Example |

|---|---|---|---|

| -h | database.connection.url from the file nevismeta.properties | Defines the database host.You can have a single host or multiple hosts (separate by ","). | -h localhost |

| -P | 3306 | Defines the database port. | -P 1234 |

| -u | root | Defines the database root user to create the nevismeta DB and user. | -u customUser |

| -p | false | Flag to enable/disable a password-protected connection to the DB. The value must be "true" or "false". | -p true |

The command will create an empty MariaDB database with the following users:

| User | Local host | Current machine hostname |

|---|---|---|

<schema owner> | <schema owner>@localhost | <schema owner>@<current machine name> |

<schema user> | <schema user>@localhost | <schema user>@<current machine name> |

For example, the schema owner is umet01, the schema user is umet02, and current machine hostname is iam. In this case, the following users will be created:

- umet01@localhost

- umet02@localhost

- umet01@iam

- umet02@iam

The setup information is taken from the nevismeta.properties file, as documented in Deployment types:

| Property | Setup Information |

|---|---|

| database.connection.url | DB host |

| database.connection.schema.owner.username | DB schema owner username |

| database.connection.schema.owner.password | DB schema owner password |

| database.connection.username | DB application user username |

| database.connection.password | DB application user password |

Tables and other constraints are configured later via Flyway when starting a nevisMeta instance (see also further below, section Database migration with MariaDB.

Overview of database users

To separate concerns, the database is managed by several database users. The creation of the users is explained below.

| Username | Purpose | Required permissions | Created when |

|---|---|---|---|

| umet01 | A schema owner has full control over the schema objects (tables, views, etc.),- can execute DDL,- is used for database migration only (see also Database migration with MariaDB] | ||

| umet02 | An application user has read and write access to all objects in the schema,- cannot execute DDL,- is used for daily operations. | SELECT, INSERT, DELETE, UPDATE, EXECUTE | During |

| replication_user | Account used by the slave node to log into the master node | REPLICATION SLAVE to be able to replicate data | During |

| binarylog_user | Account used for binary log management | SUPER permission to be able to purge the binary logs | During |

Configuring nevisMeta to use MariaDB as persistence backend

You configure the use of MariaDB as persistence backend in the file nevismeta.properties (see the following table):

database.type

Example: mariadb

Defines the persistence backend type. To use the MariaDB server as persistent backend, set this property to mariadb.

database.connection.url

Example: jdbc:mariadb:sequential//host-db1:3306,host-db2:3306/nevismeta*or*jdbc:mariadb://host-db1:3306/nevismeta

Define the connection string with fully support MariaDB syntax, because this value is handed over to the MariaDB JDBC driver as connection string without any changes.For more information on the MariaDB jdbc driver, see the documentation at:(https://mariadb.com/kb/en/library/about-mariadb-connector-j/)

database.connection.username

Example: umet02

The name of the application user who has access to the schema set in the property database.connection.url. For details, see Overview of database users.

database.connection.password

Example: umet02

The password of the user set in the property database.connection.username.

database.connection.schema.owner.username

Example: umet01

The name of the user who is the owner of the schema specified in database.connection.url. For details, see Overview of database users.

database.connection.schema.owner.password

Example: umet01

The password of the user set in the property database.connection.schema.owner.username.

Configuring outage resilience on MariaDB

This chapter is about resilience towards outages of database nodes, which differs from resilience towards failures of nevisMeta instances. To achieve resilience towards failures of nevisMeta instances, two nevisMeta instance addresses must be configured in nevisProxy. Such a configuration enables failover to the second nevisMeta instance if nevisProxy cannot connect to the first nevisMeta instance. For more information, see the nevisProxy reference guide (InetAddress parameter of the HttpConnectorServlet).

Resilience to database instance outage

This chapter explains how to set up data replication with two MariaDB instances. The advantages of this solution are:

- Resilience towards outage of one of the MariaDB instances or its host (e.g., in case of a power outage).

- Resilience towards a failure of the network infrastructure between nevisMeta and one of the MariaDB instances (e.g., in case of a data center outage).

The above statements hold only as long as at least one of the two MariaDB instances is running and reachable. Simultaneous outage of both database instances hinders nevisMeta from running properly.

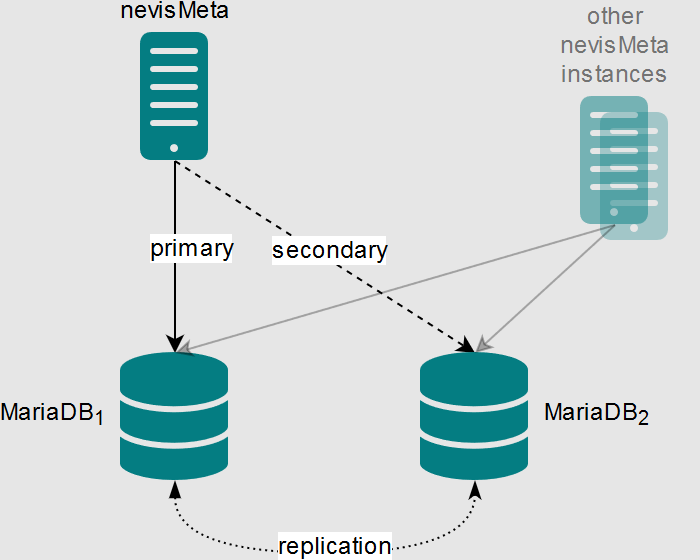

Components involved

This section describes the setup of a fail-safe persistence layer for nevisMeta using two MariaDB database hosts with data replication. This setup is tested with MariaDB version 10.3.7.

For a discussion of the nevisProxy connection to the nevisMeta configuration, see the nevisProxy reference guide (the HttpConnectorServlet).

The database hosts are called host-db1 and host-db2 in this guide. The replication is enabled in both directions (master/master setup), so that each host is a replication master and a replication slave at the same time. The replication is enabled for a single database specified in the property database.connection.url, see nevisMeta configuration properties for MariaDB]. It is called nevismeta for the sake of simplicity in this guide. Unless otherwise stated, the instructions have to be performed on both hosts.

Hint

The installation of MariaDB is not discussed here. For instructions, refer to the online documentation of MariaDB. Note that Nevis offers a database appliance that comes bundled with a pre-installed MariaDB package.

Step-by-step setup of database replication

Prepare schema, user, tables, and constraints on both database servers:

Create the schema, user, tables, and constraints as described in Creating a new database for nevisMeta on one server.

Make a copy of the newly created database. For details, see Backup persisted data on a MariaDB server.

Apply the database copy to the other database server:

mysql -u umet01 -p nevismeta < <path to the database copy file>

Ensure that you can remotely access the database specified in the property database.connection.url with the user specified in the property database.connection.username (in the nevismeta.properties file):

mysql -h <hostname of the remote database server> -P 3306 -u umet02 -p nevismeta

If the previous command does not work, follow (https://mariadb.com/kb/en/library/configuring-mariadb-for-remote-client-access/) for configuring remote client access on MariaDB.

- Grant permission to the nevisMeta user to check the replication status on each database server:

GRANT REPLICATION CLIENT ON *.* TO 'umet01'@'localhost';

- Create the replication user on each database server:

CREATE USER IF NOT EXISTS 'replication_user'@'<hostname of the other database server>' IDENTIFIED BY 'replicationpassword';

GRANT REPLICATION SLAVE ON *.* TO 'replication_user'@'<hostname of the other database server>';

FLUSH PRIVILEGES;

- Create the user for binary log monitoring on each database server:

CREATE USER IF NOT EXISTS binarylog_user IDENTIFIED BY 'binarylogspassword';

GRANT SUPER ON *.* TO binarylog_user;

Configure the MariaDB service. Add the following entries to the file /etc/my.cnf as super user on each database servers. The two configuration files (host-db1 and host-db2) differ at some points. The different lines are marked with (*).

Configure the MariaDB service on host-db1:

[mariadb]

# Enabling binary log

log-bin

# The ID of this master (*)

server_id=1

# The ID of the replication stream created by this master (*)

gtid-domain-id=1

# The basename and format of the binary log (*)

log-basename=nevismeta-primary-master

binlog-format=MIXED

# Setting for which database is replicated

replicate_wild_do_table="nevismeta.%"

# Avoiding collisions of primary IDs for tables where the primary ID is auto-incremented

# Auto-increment value

auto_increment_increment=2

# Auto-increment offset (*)

auto_increment_offset=1

# Suppressing duplicated keys errors for multi-master setup

slave_exec_mode=IDEMPOTENT

# Ignoring some data definition language errors

slave-skip-errors=1452, 1062

# Suppressing binary logs after a delay regardless of the replication status

expire_logs_days=1

# Maximum number of connections

max_connections=1000

# Size of each of the binary log files (default: 1GB)

max_binlog_size=500M

# Enabling writing to the DB in parallel threads for the replication

slave-parallel-threads=10

# enabling semi-synchronous replication

rpl_semi_sync_master_enabled=ON

rpl_semi_sync_slave_enabled=ON

# change to READ COMMITTED

transaction-isolation=READ-COMMITTEDConfigure the MariaDB service on host-db2:

[mariadb]

# Enabling binary log

log-bin

# The ID of this master (*)

server_id=2

# The ID of the replication stream created by this master (*)

gtid-domain-id=2

# The basename and format of the binary log (*)

log-basename=nevismeta-secondary-master

binlog-format=MIXED

# Setting for which database is replicated

replicate_wild_do_table="nevismeta.%"

# Avoiding collisions of primary IDs for tables where the primary ID is auto-incremented

# Auto-increment value

auto_increment_increment=2

# Auto-increment offset (*)

auto_increment_offset=2

# Suppressing duplicated keys errors for multi-master setup

slave_exec_mode=IDEMPOTENT

# Ignoring some data definition language errors

slave-skip-errors=1452, 1062

# Suppressing binary logs after a delay regardless of the replication status

expire_logs_days=1

# Maximum number of connections

max_connections=1000

# Size of each of the binary log files (default: 1GB)

max_binlog_size=500M

# Enabling writing to the DB in parallel threads for the replication

slave-parallel-threads=10

# enabling semi-synchronous replication

rpl_semi_sync_master_enabled=ON

rpl_semi_sync_slave_enabled=ON

# change to READ COMMITTED

transaction-isolation=READ-COMMITTEDRestart the mariadb servers on both hosts:

sudo systemctl restart mariadb

Semi-synchronous replication

By default, MariaDB uses asynchronous replication. To reach more consistency, it is recommended using semi-synchronous replication.

The database configurations previously shown enable semi-synchronous replication with the following lines:

rpl_semi_sync_master_enabled=ON

rpl_semi_sync_slave_enabled=ON

MariaDB versions before 10.3.3 require the installation of plug-ins for semi-synchronous replication and are not supported.

Start-up of replication

To start the replication, log in as root into your MariaDB client and run the following commands.

- On host-db1 (points to the master on host-db2):

Setting master on host-db1

CHANGE MASTER TO

MASTER_HOST='host-db2',

MASTER_USER='replication_user',

MASTER_PASSWORD='replicationpassword',

MASTER_PORT=3306,

MASTER_USE_GTID=current_pos,

MASTER_CONNECT_RETRY=10;

- On host-db2 (points to the master on host-db1):

Setting master on host-db2

CHANGE MASTER TO

MASTER_HOST='host-db1',

MASTER_USER='replication_user',

MASTER_PASSWORD='replicationpassword',

MASTER_PORT=3306,

MASTER_USE_GTID=current_pos,

MASTER_CONNECT_RETRY=10;

- On host-db1:

Starting replication on host-db1

START SLAVE;

- On host-db2:

Starting replication on host-db2

START SLAVE;

Verify on both hosts that the data replication runs properly:

SHOW SLAVE STATUS\G;

# then look for these both values

# Slave_IO_Running: Yes

# Slave_SQL_Running: Yes

Once both MariaDB servers start replication successfully, configure two database hosts in the property database.connection.url and restart nevisMeta. See also nevisMeta configuration properties for MariaDB further above.

database.connection.url=jdbc:mariadb:sequential//host-db1:3306,host-db2:3306/nevismeta

We recommend using sequential fail-over mode, which will sequentially use the database nodes in the provided order, one at the time.

The connection handling to a database in nevisMeta is lazy. If one of the database nodes fails, the system continuously moves each connection to the other node. The same happens if the failing database node recovers. That is, the connections are continuously re-initiated to the recovered node as they age out.

Additional setup

Purging the binary logs

With the provided configuration (expire_logs_days=1 in the MariaDB settings), the system will automatically remove binary logs that are older than one day, even if the logs were not copied by the slave. This prevents the disk of the master node from being filled up in case the slave is down for a long time. The automatic binary log removal takes place when

- the master DB node starts,

- the logs are flushed (nevisProxy does not use this feature),

- the binary log rotates, or

- the binary logs are purged manually (see below).

So binary logs older than one day can exist, if none of the listed actions occurred recently.

Complementary to this expiration feature, MariaDB provides the possibility to manually purge the binary logs. The purge action removes all binary logs that were already copied by the slave. This allows a safe removal of the binary logs on a regular basis. The nevisProxy package is delivered with an adaptable purging script, which is located at: /opt/nevisproxy/sql/mariadb/purgebinarylogs.sh

To use this script,

- copy the script to a location of your choice, and

- adapt it to your configuration.

The script takes care of both DB nodes, so that it only needs to be configured once. You can schedule the script to run for example once per hour, with a cron job:

/etc/crontab

0 * * * * /var/opt/nevisproxy/confluence/conf/purgebinarylogs.sh # Absolute path of your adapted script

Size the binary logs

The provided configuration (max_binlog_size=500M in the MariaDB settings) allows you to configure the maximal size of the binary log files before rotating. The smaller the size, the more often rotations will occur, which will slow down replication. The advantage is a more efficient purge process. The bigger the size, the less often rotations will occur, but the disk may be filled with old logs.

According to our experiences, a size less than 8K does stop replication completely under heavy load, because the slave keeps rotating the logs.

Troubleshooting

There is a common issue with configuring MariaDB to allow remote client access. If you encounter this problem, have a look at this useful article from MariaDB: Configuring MariaDB for Remote Client Access.

Usually the slave stops replication if an error occurs. You can check the state of the slave with the following sql command:

show slave status\G

If replication has stopped, usually the error that caused it will be displayed. First you should try to fix the error. If this is not possible, you can do a "forced" restart of the slave like this:

- On the master call (to display the current state of the master):

MariaDB [replicated_session_store]> show master status\G

*************************** 1. row ***************************

File: nevismeta-primary-master-bin.000131

Position: 194630804

Binlog_Do_DB:

Binlog_Ignore_DB:

1 row in set (0.00 sec)

- On the slave (using the values returned by the above call "show master status\G" on the master):

STOP SLAVE;

CHANGE MASTER TO

MASTER_LOG_FILE='nevismeta-primary-master-bin.000131',

MASTER_LOG_POS=194630804;

START SLAVE;

In this way, the system will restart the slave, without replicating to the slave all data manipulation that occurred from the moment replication has stopped until now.

Database migration with MariaDB

The database schema may evolve over time. Migration of the database schema is automatically done when a new version of nevisMeta connects for the first time to a database with an old database schema.

It is strongly recommended that you back up the database before you upgrade nevisMeta. This is because earlier versions of nevisMeta may not be able to connect to the database anymore after the completion of the automated migration.

Couchbase Persistence Backend

Deprecated . Migration to MariaDB is recommended.

If you want to use Couchbase as a data store, provide a Couchbase cluster yourself, as this is not part of this product. Furthermore, it is your responsibility to set up, configure and operate the database.

Before configuring a nevisMeta instance to use a Couchbase cluster, a bucket on a running Couchbase cluster needs to be created.

This chapter does not guide through the setup of Couchbase servers. If step-by-step instructions to install a new Couchbase setup are needed, refer to the Couchbase documentation.

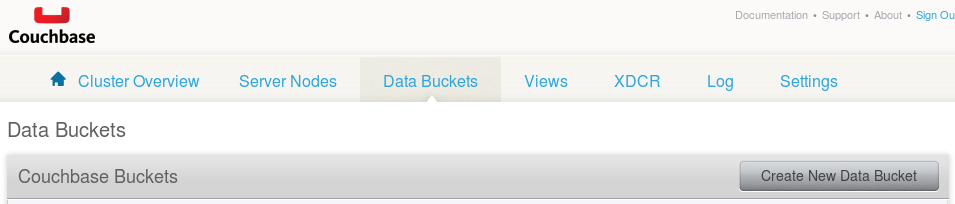

Once a Couchbase server is running, open its administration web console (typically at http://<host>:8091).

Clustering

To design the Couchbase cluster (specifically the number of nodes), consider the use cases the authentication flow relies on. AdNovum recommends using at least two nodes in situations where data loss can be tolerated, and up to five nodes for setups where the data availability is critical.

Advanced Couchbase features

Couchbase is an advanced distributed document storage solution that supports many interesting and useful features such as cross datacenter replication, statistics and monitoring. Take the time to read up on it in the Couchbase online documentation.

Setting up buckets

In Couchbase, associated data is grouped into buckets. Each group of nevisMeta instances that should share their data must have a dedicated bucket. This is done by selecting the tab Data Buckets and by clicking the button Create New Data Bucket:

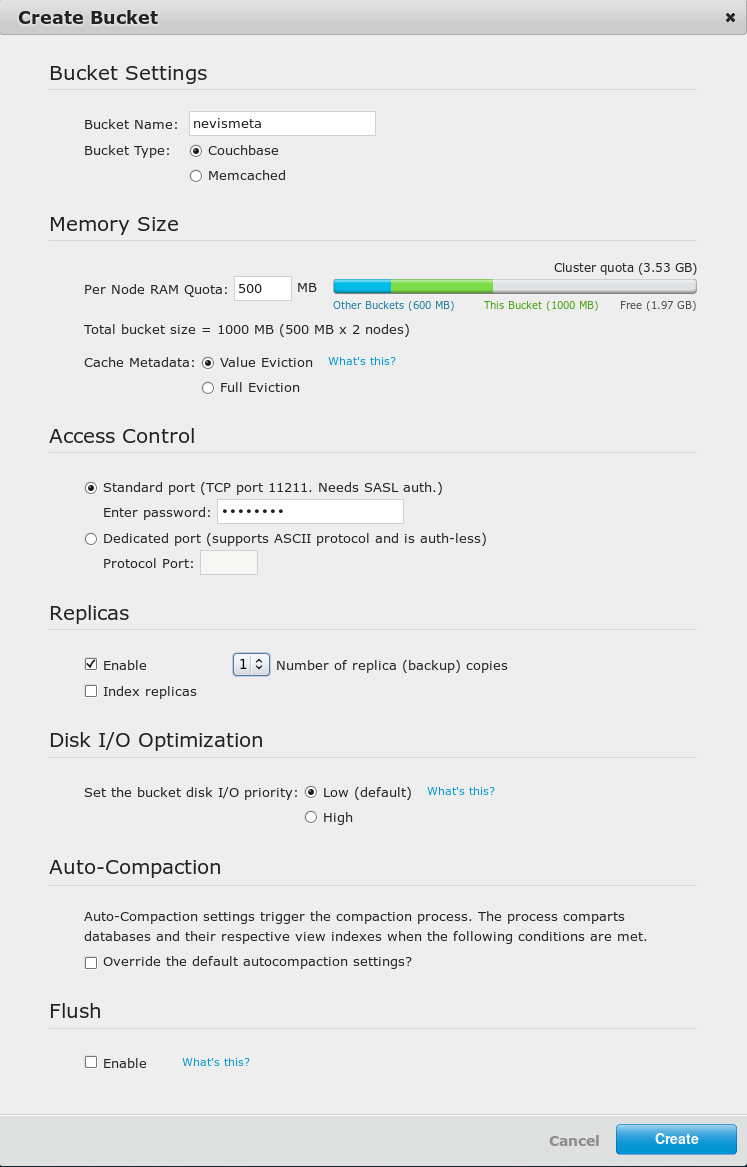

Couchbase then prompts to configure the new bucket. The important settings to consider are:

- Bucket Type: The option "Couchbase" needs to be selected (nevisMeta uses the higher-level document store interface provided by Couchbase buckets rather than the low-level Memcached protocol).

- Per Node RAM Quota: The RAM allocated to a bucket defines how much data the bucket can keep in-memory rather than having to load it from the file system. Because the amount of data that nevisMeta instances store in the database is typically small, this setting doesn't need to be excessively sized. AdNovum recommends starting with a configuration of 500 MB and to adjust this setting in case unsatisfactory response times in performance tests should be observed.

- Access Control: The option "Standard port" needs to be selected and a password for this bucket provided to protect the data from unauthorized access.

- Replicas: AdNovum recommends holding at least one replica (or more, for productive setups) in order that a failure of a single node in the Couchbase cluster doesn't lead to a complete loss of the data.

The following screenshot is an example of what a new bucket configuration might look like:

Once the bucket is created, configure the nevisMeta instances to use the bucket. For more information, see further below Configuring nevisMeta to use Couchbase as persistence backend.

After a restart, the nevisMeta instance will connect to the configured Couchbase nodes and maintain a connection to the cluster even after an outage of all configured nodes as long as the cluster is still running.

Configuring nevisMeta to use Couchbase as persistence backend

You configure the use of Couchbase as the persistence backend in the file nevismeta.properties:

database.type

Value: couchbase

Persistence backend type, set to couchbase to indicate that Couchbase is used.

store

Value: default: couchbase:http://<host>:<port>/<bucket>?password=<password>

couchbase:http://<host>:<port>/<bucket>?password=<password>

where:

<host>: hostname of Couchbase server<port>: default 8091<bucket>: name of the bucket to be used<password>: password of the bucket (if set)